In an era where Generative AI, natural language processing, and machine learning are reshaping business operations, leveraging LLMs (Large Language Models) is no longer optional — it’s strategic. But many organizations hesitate because LLM adoption seems complex, costly, and opaque. This blog will guide you through LLM development services with transparent pricing, showing how you can accelerate growth while maintaining budget clarity, security, and scalability.

Understanding LLM Development Services

What Are LLM Development Services?

Large Language Models (LLMs) are transformer neural networks trained on massive amounts of text (and, more recently, multimodal data) to perform tasks such as text summarization, question answering, classification, and content creation. (Wikipedia)

When we discuss LLM development services we mean the totality of work required to move from a general-purpose model to a production-grade AI solution. The work can include:

- Model training / pretraining: Building or extending an existing base transformer model (examples: GPT, BERT, PaLM, LLaMA)

- Fine-tuning / custom LLM: Adapting an existing base model with data from a domain (examples: healthcare records, legal records, call transcripts)

- Deployment & hosting: APIs, cloud, on-device, hybrid deployment

- Integration with enterprise systems: CRMs, ERPs, knowledge bases, chatbots, workflow tools

- Monitoring, iteration, A/B testing

- Guardrails, compliance checks, security protocols, data governance

Ultimately, LLM development is about more than simply "making an AI talk"; it’s about making AI a useful, usable, and sustainable part of your business ecosystem.

Importance of LLMs in Modern Business

LLMs and Generative AI are rapidly transforming industries. The global Large Language Model market was estimated at USD 5,617.4 million in 2024 and is projected to grow at a CAGR of ~36.9% to reach USD 35,434 million by 2030. (Grand View Research) Other projections estimate similar growth trajectories. (MarketsandMarkets)
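To make the growth arithmetic concrete, here is a minimal Python sketch of how a compound annual growth rate (CAGR) relates a starting market size to a projected one. The function names are illustrative, and the six-year window (2024 to 2030) is an assumption; the cited report may compound from a different base year, so the implied rate need not match the quoted ~36.9% exactly.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the text, in USD millions.
market_2024 = 5617.4
market_2030 = 35434.0

# Assumption: six compounding periods between the two estimates.
rate = implied_cagr(market_2024, market_2030, years=6)
print(f"Implied CAGR 2024-2030: {rate:.1%}")
print(f"Check, projected 2030 value: {project(market_2024, rate, 6):,.0f}")
```

Under that assumption the implied rate comes out at roughly 36%, in the same range as the quoted figure.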

Several trends validate this momentum:

- In 2024, the chatbots and virtual assistants application segment led the LLM market, accounting for ~26.8% share. (Grand View Research)

- Over 61.7% of developers now have or plan to launch LLM-based apps in production within a year. (Arize AI)

- In U.S. surveys, ~40% of adults reported using generative AI tools, and many use them daily at work. (Federal Reserve Bank of St. Louis)

- According to a 2025 Elon University survey, 34% of U.S. adults use an LLM at least once a day; 72% said they used ChatGPT, 50% Google’s Gemini, and 9% Claude. (Elon University)

Case Example (Healthcare):

A hospital system deployed a custom LLM fine-tuned on medical records, enabling clinical insights, automated summarization, question answering over patient history, and sentiment analysis of patient feedback.

The result: physician documentation time reduced by 30%, improved triage in customer support channels, and safer diagnostic suggestions via guardrail-enforced outputs.

Case Example (Retail / E-commerce):

An online retailer integrated an LLM into its customer support chatbots, combined with recommendation systems and workflow automation. The system parsed sales call recordings, customer interactions, and meeting transcripts, assisting support agents, improving conversion, and enabling content generation for marketing campaigns.

These examples show how LLMs can deliver real ROI when embedded via LLM development services.

Benefits of Partnering with LLM Development Service Providers

Expertise and Specialization

Organizations frequently choose to work with specialized LLM development firms (e.g. BluEnt, TokenMinds, SparxIT), or to use large language models offered by platforms such as OpenAI, Microsoft, Hugging Face, and Rasa.

These suppliers offer:

- Domain experience in AI development, deep learning, transformers, MLOps, and natural language understanding

- Proven E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness)

- Pre-built modules (e.g. Retrieval-Augmented Generation, few-shot learning, agentic AI)

- Access to specialized talent that can assist in areas such as prompt engineering, model tuning, security, and regulatory compliance

Many companies now, for example, wrap OpenAI GPT-4 or fine-tune models such as Llama 2, Claude, Gemini, or PaLM within their own proprietary technology stacks. This is advantageous because they get the latest base architecture and can still customize the model for a specific domain.

Scalability and Customization

Many LLM service suppliers make it possible to scale from a pilot to an enterprise-grade offering through:

- Modular architectures: Can switch between on-premise, cloud-based, hybrid, or edge deployment

- Flexible deployment modes: Can support in-context learning, few-shot learning, or full fine-tuning deployments

- Horizontal scaling: Can quickly absorb spikes in usage, high concurrency, and seasonal load

- Integrations with other office tech: CRMs, ERPs, internal knowledge bases, knowledge tools, and workflows

- Custom features: classification, sentiment analysis, text summarization, prompt chaining, A/B testing, predictive modeling, and recommendation systems

In growth phases, this kind of flexibility is vital — you don’t want to outgrow your architecture mid-project.
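As an illustration of the few-shot mode mentioned in the list above, the following Python sketch assembles a classification prompt from a handful of labeled examples, so the base model is adapted through its input rather than through retraining. The `Example` type, the prompt layout, and the sample tickets are all hypothetical; the resulting string would be sent to whichever model API your provider exposes.

```python
from dataclasses import dataclass


@dataclass
class Example:
    text: str
    label: str


def build_few_shot_prompt(task: str, examples: list[Example], query: str) -> str:
    """Place labeled examples before the new input so the model can imitate them."""
    lines = [task, ""]
    for ex in examples:
        lines.append(f"Text: {ex.text}")
        lines.append(f"Label: {ex.label}")
        lines.append("")
    # The final item is left unlabeled; the model is expected to complete it.
    lines.append(f"Text: {query}")
    lines.append("Label:")
    return "\n".join(lines)


# Hypothetical support-ticket examples.
examples = [
    Example("My invoice shows the wrong amount.", "billing"),
    Example("The app crashes when I log in.", "technical"),
]
prompt = build_few_shot_prompt(
    "Classify each support ticket as billing or technical.",
    examples,
    "I was charged twice this month.",
)
print(prompt)
```

Because the examples live in the prompt, swapping domains means swapping a list rather than retraining, which is one reason this mode suits early pilots.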

Transparent Pricing Models in LLM Development

Understanding Different Pricing Structures

When evaluating LLM development services, transparent pricing is a competitive differentiator. Common models include:

In contrast to opaque pricing, transparent pricing has these hallmarks:

- A clear component breakdown (e.g. model training, fine-tuning, API, infrastructure, support)

- Defined rate cards, overage fees, and scope boundaries

- Escalation & discount rules as usage grows

- Auditability and cost visibility for the client

Transparent pricing gives you control over budgets and avoids nasty surprises.
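The hallmarks above can be sketched as a small cost calculator. This is a minimal Python illustration, not any provider's actual pricing: the `RateCard` fields, the discount rule, and every number are hypothetical and chosen only to show a base fee, an overage charge, and a volume discount appearing as separate, auditable line items.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RateCard:
    """Hypothetical rate card; every number used below is illustrative."""
    base_fee: float          # flat monthly fee (hosting, support)
    included_tokens: int     # tokens covered by the base fee
    overage_per_1k: float    # price per 1,000 tokens beyond the included volume
    discount_threshold: int  # total monthly tokens at which the discount starts
    discount_rate: float     # fraction taken off the overage charge


def monthly_cost(card: RateCard, tokens_used: int) -> dict[str, float]:
    """Return an itemized bill so each component is visible to the client."""
    overage_tokens = max(0, tokens_used - card.included_tokens)
    overage = overage_tokens / 1000 * card.overage_per_1k
    discount = overage * card.discount_rate if tokens_used >= card.discount_threshold else 0.0
    return {
        "base_fee": card.base_fee,
        "overage": overage,
        "discount": discount,
        "total": card.base_fee + overage - discount,
    }


card = RateCard(base_fee=2000.0, included_tokens=10_000_000,
                overage_per_1k=0.02, discount_threshold=50_000_000,
                discount_rate=0.10)

for item, amount in monthly_cost(card, tokens_used=60_000_000).items():
    print(f"{item:>10}: {amount:10.2f}")
```

With an itemized result like this, a finance team can see which component drives the total and check an invoice against the agreed rate card.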

The Value of Transparent Pricing for Businesses

Transparent pricing offers several tangible advantages:

- Builds trust and fosters long-term partnerships

- Makes budgeting easier — especially when aligning with finance teams and executives

- Enables benchmarking (you can compare rates across providers)

- Encourages efficiency — service vendors have to optimize rather than hide costs

Clients of AI service firms with transparent pricing often report higher satisfaction and fewer budget overruns. This rests on the general trend in cloud services and APIs rather than on a peer-reviewed study specific to LLM service transparency.

By making cost structures explicit, providers force accountability: when you see how much “model training” versus “fine-tuning” versus “infrastructure” costs, you can negotiate, optimize, or experiment.

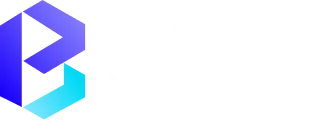

Key Considerations When Choosing LLM Development Services

Evaluating Vendor Expertise

Ask every prospective LLM development provider to demonstrate:

- Past case studies within your industry vertical (e.g. healthcare, retail, legal, gaming)

- Experience with models such as GPT-4, LLaMA, Claude, PaLM, Gemini, or Qwen3

- Ability to navigate data governance, security protocols, regulatory compliance, and local market nuance (Asia Pacific, North America, Europe)

- Customer references and a track record in customer support and in scaling LLM training, fine-tuning, deployment, and maintenance

- Team skills in MLOps, prompt engineering, LLM development, transformers, deep learning, natural language understanding, and AI ethics and fairness/guardrails

- Change management expertise across AI infrastructure and cloud providers (e.g. AWS, Microsoft Azure, Google Cloud, Alibaba Cloud), plus on-chain or web3 components (if relevant)

- Transparency in budgets, pricing breakdowns, and governance reviews

Because large language models carry risks (bias, hallucination, security), it’s essential that your partner be trustworthy in data security, compliance checks, and contractual buy-side due diligence.

Assessing the Project Scope and Timeline

To define a project well and negotiate transparently:

- Identify use cases first (e.g. customer service chatbots, legal document summarization, clinical insights, email responses, marketing content generation)

- Specify input data types (structured CRM, transcripts, social media comments, clinical notes, documents)

- Determine expected output (classification, summarization, question answering, recommendation)

- Plan integration points (APIs, CRMs, ERPs, knowledge bases)

- Build milestones and deliverables: POC → pilot → full rollout → iteration

- Set realistic timelines but allow flexibility for iteration, bug fixes, and guardrail tuning

- Include A/B testing, feedback loops, and metrics (accuracy, latency, throughput, error rates)

Leave room for scope creep (e.g. adding new data sources, new languages, new modalities), but tie that to clear pricing increments.
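The metrics named in the scoping list (accuracy, latency, throughput, error rates) can be computed from simple request logs when two variants are A/B tested. The Python sketch below assumes a hypothetical `RequestLog` record and made-up log entries; a real project would read these from its own monitoring stack and add a significance test before declaring a winner.

```python
from dataclasses import dataclass


@dataclass
class RequestLog:
    variant: str       # prompt or model variant under test, e.g. "A" or "B"
    latency_ms: float  # end-to-end response time
    correct: bool      # whether the output matched a reference answer
    failed: bool       # whether the request errored or timed out


def summarize(logs: list[RequestLog], variant: str, window_s: float) -> dict[str, float]:
    """Aggregate one variant's logs collected over a window of `window_s` seconds."""
    rows = [r for r in logs if r.variant == variant]
    if not rows:
        raise ValueError(f"no requests logged for variant {variant!r}")
    ok = [r for r in rows if not r.failed]
    return {
        "error_rate": (len(rows) - len(ok)) / len(rows),
        # Accuracy and latency are measured on successful requests only.
        "accuracy": sum(r.correct for r in ok) / len(ok) if ok else 0.0,
        "mean_latency_ms": sum(r.latency_ms for r in ok) / len(ok) if ok else 0.0,
        "throughput_rps": len(rows) / window_s,
    }


# Made-up log entries for illustration.
logs = [
    RequestLog("A", 120.0, True, False),
    RequestLog("A", 180.0, True, False),
    RequestLog("A", 300.0, False, False),
    RequestLog("A", 0.0, False, True),
    RequestLog("B", 100.0, True, False),
    RequestLog("B", 140.0, True, False),
]

for variant in ("A", "B"):
    print(variant, summarize(logs, variant, window_s=2.0))
```

Agreeing on definitions like these up front (for example, whether failed requests count against accuracy) keeps milestone acceptance criteria unambiguous.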

Future Trends in LLM Development

Evolving Technologies and Techniques on the Horizon

The LLM space continues to evolve rapidly. Watch for:

- Federated learning / on-device fine-tuning to keep data local (improving data security)

- Multimodal models (text + image + audio) merging computer vision, object detection, image recognition, document extraction

- Agentic AI that can autonomously chain tasks (e.g. fetch, reason, act)

- Retrieval-Augmented Generation (RAG) with knowledge bases and component modules

- In-context learning and few-shot learning becoming more powerful, reducing the need for full retraining

- New architectures beyond transformers (e.g. state-space models, mixture-of-experts)

- Stronger guardrails, explainability, AI ethics, bias mitigation, audit trails

- Tighter regulatory oversight, especially in healthcare, finance, legal, Europe, Asia Pacific

These trends push providers to stay ahead — meaning your development partner must stay agile.
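Of the techniques listed above, Retrieval-Augmented Generation is the easiest to show in a few lines. The Python sketch below retrieves the most relevant snippets from a small knowledge base and places them in the prompt. It makes two loud assumptions: word-count cosine similarity stands in for the embedding model and vector store a production system would normally use, and the knowledge base entries and function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the model by placing retrieved context ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical knowledge base entries.
knowledge_base = [
    "Refunds are issued within 14 days of a return request.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by chat on weekdays.",
]
print(build_prompt("How long do refunds take?", knowledge_base, k=1))
```

The design point is that domain knowledge stays in the knowledge base rather than in model weights, so updating an answer means editing a document instead of retraining.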

Predictions for Market Growth and Strategy

The LLM-powered tools market is also surging, with North America commanding ~35.2% share. For businesses, that means:

- The Enterprise LLM Market will become increasingly competitive

- Clients will demand transparent pricing, scalability, and AI-powered solutions with guarantees

- Vertical specialization (e.g. healthcare LLM, legal LLM, e-commerce LLM) will dominate

- Countries and regions (North America, Europe, Asia Pacific) will see local market nuances, regulatory pressures, and localization demands

- Open models like LLaMA, Llama 2, and Qwen3 will encourage more custom LLM development (versus black-box models such as Claude and Gemini)

To prepare, organizations should start small (pilot), validate ROI, and then scale — while building internal capabilities and governance.

Strategies for Market Growth

- Invest in Custom LLM Development: Build domain-specific LLMs (healthcare, fintech, legal, etc.) to deliver targeted performance, compliance, and faster ROI.

- Adopt Transparent Pricing Models: Offer clear pricing tiers based on usage, compute cost, and feature sets, fostering client trust and long-term partnerships.

- Focus on Scalability and Integration: Develop modular LLM systems that can easily integrate with enterprise tools like CRMs, ERPs, and BI platforms.

- Enhance Data Governance and Compliance: Implement regional compliance frameworks (GDPR, HIPAA, etc.) to meet local regulations and attract global clients.

- Leverage Open-Source Models: Utilize open models like LLaMA or Qwen3 for cost-effective customization, transparency, and innovation.

- Invest in Fine-Tuning and RAG Models: Use Retrieval-Augmented Generation (RAG) to combine domain data with pre-trained LLMs for higher contextual accuracy.

- Strengthen AI Explainability and Ethics: Create interpretable models with ethical guidelines to enhance user trust and meet enterprise governance needs.

- Develop AI-as-a-Service Platforms: Package LLM solutions as subscription-based APIs or platforms for easy adoption by startups and SMEs.

Growth Opportunities

- Vertical Expansion – Build LLMs for specialized industries like legal tech, healthcare diagnostics, or financial risk analysis.

- Regional Market Penetration – Adapt models to local languages, regulations, and data availability in emerging markets.

- Partnership Ecosystems – Collaborate with cloud providers, consulting firms, and universities to expand reach and credibility.

- Continuous Learning Systems – Enable self-improving models that evolve with user feedback and real-time data streams.

- AI Consulting and Training – Offer strategy consulting and hands-on workshops to help clients adopt LLM technology efficiently.

Conclusion

Investing in LLM development services with transparent pricing is one of the smartest ways for businesses to accelerate growth in the age of artificial intelligence. A well-chosen partner helps you navigate model training, fine-tuning, deployment, integration, and monitoring — all while maintaining clarity around budgets, scalability, and governance.

By understanding the key tradeoffs, assessing vendor capabilities, and staying attuned to future trends (from agentic AI to multimodal models), your organization can confidently adopt custom LLMs, chatbots, sentiment analysis, classification, recommendation systems, or predictive modeling with minimal risk. Ready to build your custom LLM solution? Connect with BNXT.ai to explore scalable, secure, and transparently priced AI development services.

People Also Ask

What industries benefit from LLM development?

LLM solutions are used across finance, healthcare, e-commerce, education, real estate, and more — wherever data-driven automation and personalization are key.

What does “transparent pricing” mean in LLM services?

Transparent pricing means no hidden costs — you pay for clear deliverables such as data preparation, model training, deployment, and ongoing support, with upfront cost breakdowns.

Can LLMs be integrated with existing systems?

Absolutely. LLMs can be integrated into CRMs, ERPs, chatbots, and analytics platforms through APIs or custom connectors.

What is the typical timeline for LLM implementation?

Depending on complexity, projects usually take 4–12 weeks, including data preparation, model training, integration, and testing.
