Artificial intelligence is reshaping how we work, make decisions, and interact with technology. If you want to build intelligent systems that improve your operations or automate your processes, LangChain is one of the most widely used frameworks for the job.

LangChain enables developers and businesses to put large language models to work in AI applications and workflow automation.

This guide is designed to give both enthusiastic novices and experienced developers a working knowledge of LangChain and how to apply it effectively.

What Is LangChain and Why It Matters in AI Workflows

What is LangChain? In simple terms, LangChain is an open-source framework, available for Python and JavaScript/TypeScript, that helps developers connect large language models (LLMs) with external systems, APIs, and databases. It lets you build multi-step workflows in which AI models, data sources, and automation tools pass results to one another.

Why does LangChain matter for workflow automation, including enterprise workflow automation? Modern businesses deal with vast amounts of data and repetitive processes. LangChain enables:

- Automation of tasks such as document handling and customer queries

- Building AI systems that are more responsive and intelligent

- Efficient orchestration of linear workflows and branching paths

A common question is: “Is LangChain open source?” Yes. LangChain is released under the MIT license, so developers can contribute to it, extend it, and adapt it for various AI applications.

Exploring LangChain’s Core Features and Applications

LangChain is a framework designed to make AI workflows smarter and more efficient. Its core features (chains, agents, memory management, and data integrations) let developers build complex, automated processes from reusable components.

Chains define the flow of AI tasks, while agents can make decisions, fetch data, and interact with APIs autonomously. Memory management ensures context-aware responses, making applications like chatbots more intelligent.

Integration with external data sources and vector databases enables real-time information retrieval. Prompt templates and the Prompt Hub standardize inputs across workflows, improving consistency and reliability. Together, these features make LangChain ideal for building applications like text summarisation, decision-making systems, and AI-driven data analysis tools.

A clear understanding of LangChain applications starts with its core features:

- Chains & Dynamic Chains – These define the order of operations, so that the output of one step (often an AI model call) feeds into the next. Chains support both simple linear workflows and more complex, branching ones.

- Agents – Autonomous AI programs that retrieve data, make decisions, and interact with APIs. LangChain supports several agent types, including agents built on OpenAI's tool-calling models and agents extended with custom tools.

- Memory Management & Conversational Memory – LangChain can remember previous interactions using memory systems, which is crucial for chatbots, customer support assistants, and other context-aware AI tasks.

- Data & Vector Database Integration – Connect to external data sources, vector stores, and services such as Hugging Face, Yahoo Finance News, or OpenWeatherMap for real-time information retrieval.

- Prompt Templates & Prompt Hub – Easily define input prompts for your AI models, ensuring consistency across multiple workflows.

With these features, LangChain is ideal for building text summarisation, decision-making systems, legal document reviews, and other AI development projects.

Practical LangChain Use Cases and Benefits

LangChain enables AI workflow automation solutions that streamline complex processes, reduce manual effort, and support better decision-making. For larger organisations, an enterprise LangChain implementation can integrate AI into existing systems, improving efficiency, scalability, and day-to-day business operations.

LangChain’s versatility shines through its AI applications:

- Chatbots – Build chatbots that use conversation memory to provide consistent, personalized responses. Examples include customer support bots and interactive knowledge assistants.

- Document Workflow Automation – Automatically read, summarize, and route documents, reducing manual effort. Integrating a vector store allows semantic searches across large datasets.

- Data Processing Pipelines – Automate data retrieval and processing using components such as retriever tools, or platforms such as SingleStore Helios and SingleStore Notebook.

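- Visual Workflows & LangGraph – Model workflows as graphs with LangGraph, LangChain's library for stateful, multi-step agent workflows, and use a visual interface such as LangGraph Studio to design them, monitor progress, and manage dynamic chains.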
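The semantic search mentioned under Document Workflow Automation comes down to turning text into vectors and ranking stored vectors by their similarity to the query vector. The sketch below shows only that ranking step, using cosine similarity. To stay self-contained it uses toy word-count vectors (the embed, cosine, and search helpers are hypothetical names), which only match shared words; a real vector store such as FAISS, Pinecone, or Chroma uses an embedding model that also captures meaning.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents whose vectors are most similar to the query vector."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


docs = [
    "invoice payment terms are thirty days",
    "the contract renewal clause allows termination",
    "quarterly invoice totals by region",
]
print(search("invoice payment", docs, k=1))  # → ['invoice payment terms are thirty days']
```

Swapping embed for a real embedding model changes which documents count as "similar", but the rank-by-similarity step stays the same.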

The benefits include:

- Increased efficiency and productivity

- Reduced human error

- Scalable AI solutions for enterprise workflow automation

LangChain Framework and Ecosystem Overview

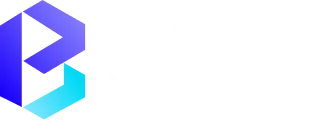

The LangChain framework is supported by a rich ecosystem that ensures developers have everything they need:

- LangChain, Inc. and the official LangChain website provide documentation, tutorials, and updates.

- The LangChain Hub, a shared repository of prompts, and related tooling make workflow management and orchestration easier.

- Integrations with external systems, APIs, and popular AI platforms such as Hugging Face and Google Gemini.

- Tools for memory management, vector embeddings, the agent scratchpad, and agent tools.
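The agent scratchpad is the running record of the actions an agent has taken and the observations it got back, and it is fed into each new decision. The framework-free sketch below imitates that loop without a model: choose_next_action is a hypothetical rule-based stand-in for the LLM's reasoning, calculator is a toy tool, and max_steps is an illustrative safety limit.

```python
def calculator(expression: str) -> str:
    """Toy tool: adds two integers written as 'a + b'."""
    a, b = (int(part) for part in expression.split("+"))
    return str(a + b)


TOOLS = {"calculator": calculator}


def choose_next_action(question: str, scratchpad: list[tuple[str, str, str]]):
    """Hypothetical stand-in for the LLM: reads the scratchpad to pick the next step."""
    if not scratchpad:
        return ("calculator", "2 + 3")
    if len(scratchpad) == 1:
        previous = scratchpad[-1][2]  # observation from the previous step
        return ("calculator", f"{previous} + 10")
    return ("finish", scratchpad[-1][2])


def run_agent(question: str, max_steps: int = 5):
    scratchpad = []  # (tool, tool_input, observation) for every step so far
    for _ in range(max_steps):
        tool, tool_input = choose_next_action(question, scratchpad)
        if tool == "finish":
            return tool_input, scratchpad
        observation = TOOLS[tool](tool_input)
        scratchpad.append((tool, tool_input, observation))
    return "stopped: step limit reached", scratchpad


answer, steps = run_agent("What is (2 + 3) + 10?")
print(answer)  # → 15
```

In a real agent the model sees the scratchpad as part of its prompt, which is how it builds on earlier tool results instead of starting over at each step.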

This ecosystem makes it possible to build sophisticated AI workflows without reinventing the wheel.

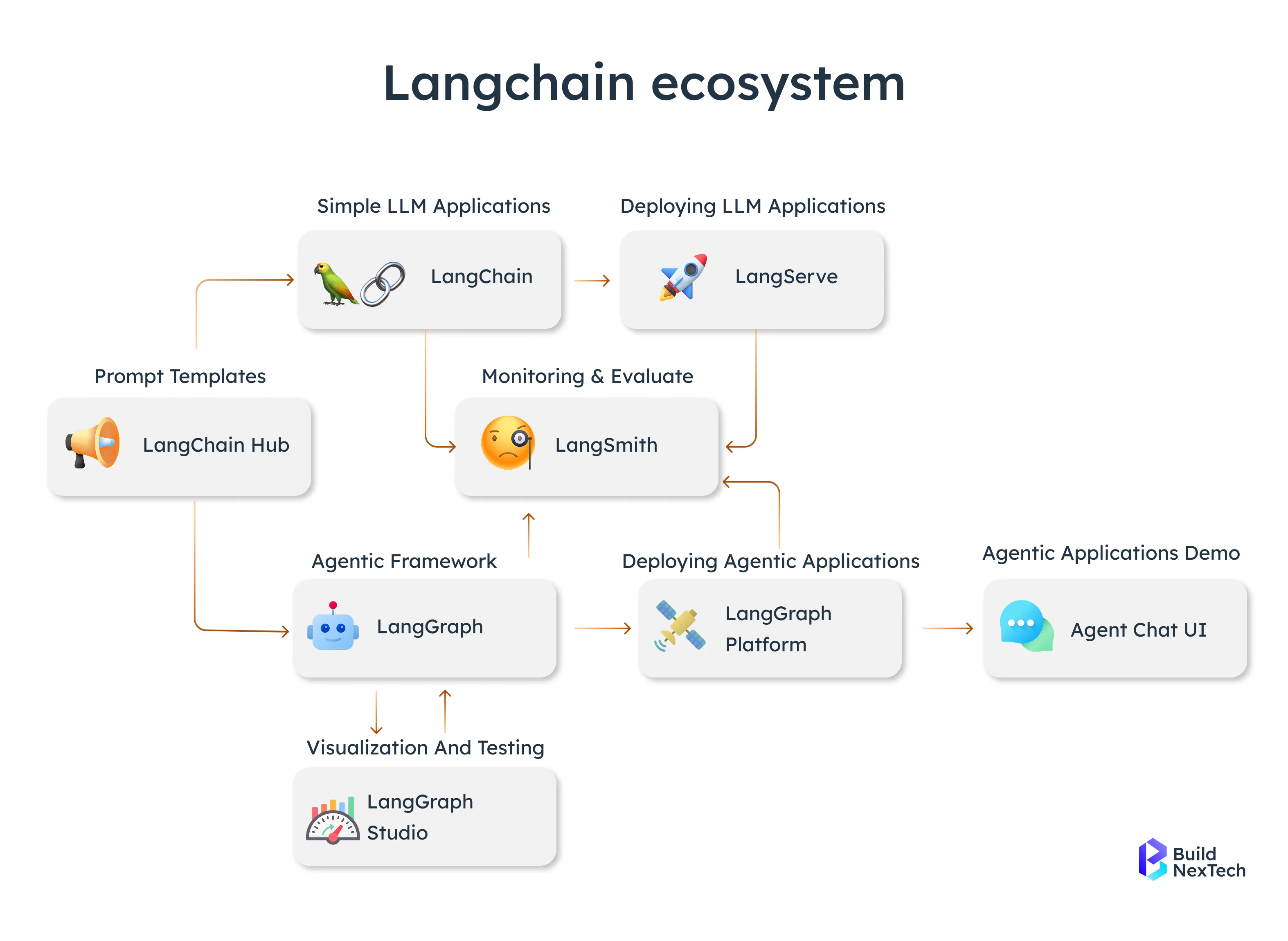

Step-by-Step Guide to Using LangChain for AI Workflows

Creating AI workflows with LangChain can seem complex at first, but by breaking the process into clear, manageable steps, even beginners can build powerful, automated systems. This comprehensive guide walks you through each stage—from setting up your environment to deploying scalable AI solutions.

As demand grows for integrating LLMs into real-world applications, LangChain gives developers a structured way to connect large language models to those applications. This enables context-aware automation, intelligent decision-making, and efficient AI-driven workflows that enhance productivity and business intelligence.

⚙️ Environment Setup

The first step in building a LangChain workflow is preparing your environment.

Install LangChain using pip:

```shell
pip install langchain
```

- Ensure you have valid API keys for your chosen AI models (like OpenAI, Anthropic, or Cohere). Provider integrations ship as separate packages, such as langchain-openai.

- Set up dependencies such as langchain-community or vector stores (like FAISS, Pinecone, or Chroma) depending on your workflow needs.

A properly configured environment helps your workflow run smoothly and avoids model connection or dependency issues later in development.
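Before writing any workflow code, a short script can confirm the setup. The sketch below assumes an OpenAI-backed workflow, so its default lists check for the langchain and langchain_openai packages and the OPENAI_API_KEY environment variable. Both lists and the check_environment helper are illustrative assumptions, so adjust them to your own providers.

```python
import importlib.util
import os

# Assumed requirements for an OpenAI-backed workflow; edit to match your stack.
REQUIRED_PACKAGES = ["langchain", "langchain_openai"]
REQUIRED_ENV_VARS = ["OPENAI_API_KEY"]


def check_environment(packages=REQUIRED_PACKAGES, env_vars=REQUIRED_ENV_VARS):
    """Return a list of problems; an empty list means the setup looks complete."""
    problems = []
    for name in packages:
        # find_spec returns None when the package cannot be imported
        if importlib.util.find_spec(name) is None:
            problems.append(f"missing package: {name}")
    for var in env_vars:
        if not os.environ.get(var):
            problems.append(f"missing environment variable: {var}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```

Running this once in CI or at application start-up surfaces a missing key or package immediately, rather than as an error from the first model call.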

🧠 Define Your Workflow

Once your environment is ready, plan the logical structure of your workflow. This involves deciding:

- What tasks your AI needs to perform

- How data flows between those tasks

- Whether the flow is linear (simple input → output) or branching (conditional logic, multi-step reasoning)

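Here’s a basic Python example that uses LangChain Expression Language (LCEL), where each step is a runnable:

```python
from langchain_core.runnables import RunnableLambda

input_processing = RunnableLambda(lambda text: text.strip())
ai_response = RunnableLambda(lambda text: f"Response to: {text}")  # placeholder for a model call
output_handling = RunnableLambda(lambda text: text.upper())

# The | operator chains the steps into one linear workflow
workflow = input_processing | ai_response | output_handling
print(workflow.invoke("  hello  "))  # → RESPONSE TO: HELLO
```

By clearly defining this structure early, you prevent bottlenecks and make it easier to debug or expand later.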
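The workflow above is linear. In a branching workflow, a decision point picks which step runs next. The framework-free sketch below shows the idea with hypothetical step functions (classify, handle_refund, handle_general) and a keyword rule standing in for what might be an LLM classification. Within LangChain, a RunnableBranch or a LangGraph conditional edge plays the role of the routing lookup.

```python
def classify(text: str) -> str:
    """Hypothetical classifier step; a real workflow might ask an LLM instead."""
    return "refund" if "refund" in text.lower() else "general"


def handle_refund(text: str) -> str:
    return f"Routing to billing team: {text}"


def handle_general(text: str) -> str:
    return f"Answering directly: {text}"


# Maps each classification label to the branch that handles it
ROUTES = {"refund": handle_refund, "general": handle_general}


def run_workflow(text: str) -> str:
    cleaned = text.strip()         # linear step: input processing
    label = classify(cleaned)      # decision point
    return ROUTES[label](cleaned)  # branch: run the handler for this label


print(run_workflow("  I want a refund for order 42 "))
# → Routing to billing team: I want a refund for order 42
```

Keeping the routes in a dictionary makes adding a new branch a one-line change, which matters once a workflow grows beyond two or three paths.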

🤖 Integrate AI Models

LangChain’s strength lies in how easily it integrates LLMs and generative AI models.

These models are the brain of your workflow—they handle natural language understanding, reasoning, and content generation.

Example:

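```python
from langchain_openai import ChatOpenAI  # pip install langchain-openai

# Reads the key from the OPENAI_API_KEY environment variable; the model name is an example
llm = ChatOpenAI(model="gpt-4o-mini")

response = llm.invoke("Summarize this document: <document text>")
print(response.content)
```

Through LLM integration, your workflow gains the ability to analyze, summarize, or generate intelligent outputs automatically.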

🪄 Add AI Agents

AI Agents make your LangChain workflow truly autonomous. Agents can retrieve data, call APIs, perform calculations, and make decisions—all without manual input.

For instance:

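```python
from langchain_core.tools import tool
from langgraph.prebuilt import create_react_agent  # pip install langgraph


@tool
def search_api(query: str) -> str:
    """Search the web for recent news on a topic."""
    return f"No results yet for {query!r}"  # placeholder: call a real search service here


# Uses LangGraph's prebuilt agent (the older initialize_agent helper is deprecated);
# llm is the chat model from the previous step, and the agent decides when to call the tool
agent = create_react_agent(llm, [search_api])
result = agent.invoke({"messages": [{"role": "user", "content": "Find the latest AI news"}]})
print(result["messages"][-1].content)
```

These LangChain Agents interact dynamically with their environment, turning static workflows into intelligent, task-oriented systems.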

📝 Use Prompt Templates

Prompt Templates standardize how you communicate with models, ensuring consistent results.

They’re especially useful for workflows with recurring tasks, like text generation or summarization.

Example:

```python
from langchain_core.prompts import PromptTemplate

template = PromptTemplate(
    input_variables=["topic"],
    template="Write a short summary about {topic}",
)

# llm is the chat model from the "Integrate AI Models" step
output = llm.invoke(template.format(topic="LangChain AI workflows"))
print(output.content)
```

This makes your workflow modular, reusable, and easier to debug or adapt for new topics.
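PromptTemplate.from_template can infer a template's input variables from its {placeholder} fields. The standard-library sketch below shows the same idea with string.Formatter, plus a check that fails early when a variable is missing. The template_variables and fill_template helpers are hypothetical illustrations, not part of LangChain.

```python
from string import Formatter


def template_variables(template: str) -> set[str]:
    """Collect the named {placeholders} in a format-style template."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def fill_template(template: str, **values: str) -> str:
    """Hypothetical helper: fill a template, failing early if a variable is missing."""
    missing = template_variables(template) - set(values)
    if missing:
        raise ValueError(f"missing template variables: {sorted(missing)}")
    return template.format(**values)


summary_prompt = "Write a short summary about {topic} for a {audience} audience"
print(sorted(template_variables(summary_prompt)))  # → ['audience', 'topic']

# The same template reused for several recurring tasks
for topic in ["LangChain AI workflows", "vector databases"]:
    print(fill_template(summary_prompt, topic=topic, audience="technical"))
```

Failing on a missing variable before the model call is cheaper than discovering a half-filled prompt in the model's output.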

🧬 Memory and Context Management

LangChain supports different memory modules that allow your AI to remember previous interactions—essential for chatbots, recommendation systems, or decision-making applications.

Example:

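```python
from langchain_core.chat_history import InMemoryChatMessageHistory

# Stores the conversation in process memory (the older ConversationBufferMemory class is deprecated)
history = InMemoryChatMessageHistory()
history.add_user_message("Hello")
history.add_ai_message("Hi there!")

print(history.messages)  # list of HumanMessage and AIMessage objects
```

By managing context effectively, your workflow can maintain continuity in long conversations and deliver coherent, personalized responses.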
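A full conversation buffer grows with every turn and can eventually exceed the model's context window. A common mitigation is to keep only the most recent k exchanges. The sketch below is a framework-free illustration: the WindowMemory class and its k parameter are hypothetical names, not LangChain's API, although older LangChain releases offered a similar ConversationBufferWindowMemory class.

```python
from collections import deque


class WindowMemory:
    """Hypothetical windowed memory: keeps only the last k (input, output) exchanges."""

    def __init__(self, k: int = 3):
        # A deque with maxlen drops the oldest exchange automatically
        self.exchanges = deque(maxlen=k)

    def save_context(self, user_input: str, ai_output: str) -> None:
        self.exchanges.append((user_input, ai_output))

    def as_prompt_prefix(self) -> str:
        """Render the remembered turns as text to prepend to the next prompt."""
        lines = []
        for user_input, ai_output in self.exchanges:
            lines.append(f"Human: {user_input}")
            lines.append(f"AI: {ai_output}")
        return "\n".join(lines)


memory = WindowMemory(k=2)
memory.save_context("Hello", "Hi there!")
memory.save_context("My name is Ada", "Nice to meet you, Ada.")
memory.save_context("What is my name?", "Your name is Ada.")
print(memory.as_prompt_prefix())  # only the last two exchanges remain
```

The trade-off is that anything older than k turns is forgotten, so applications that need long-range recall often combine a small window with a summary of earlier turns.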

🔄 Orchestrate Tasks

Once your workflow components are in place, use LangChain’s orchestration features to define task sequences and data dependencies.

Example:

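```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate

# llm is the chat model created in the "Integrate AI Models" step
summarize = PromptTemplate.from_template("Summarize this text: {text}") | llm | StrOutputParser()
classify = PromptTemplate.from_template("Classify the topic of this summary: {summary}") | llm | StrOutputParser()

# The dict runs summarize and stores its output under "summary", which classify then receives
pipeline = {"summary": summarize} | classify
result = pipeline.invoke({"text": "<document text>"})
```

This orchestration ensures that each task executes in the correct order, data flows efficiently, and the entire pipeline remains scalable and maintainable.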
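When several tasks depend on each other's outputs, the execution order follows from those dependencies. The framework-free sketch below uses Python's graphlib.TopologicalSorter (Python 3.9+) to run hypothetical tasks (fetch, summarize, classify, report) in dependency order. The task functions are toy stand-ins for model calls; LangGraph expresses the same structure as nodes and edges.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each receives the results of the tasks it depends on
TASKS = {
    "fetch": lambda deps: "LangChain connects LLMs to tools and data.",
    "summarize": lambda deps: " ".join(deps["fetch"].split()[:3]) + "...",
    "classify": lambda deps: "technology" if "LLM" in deps["fetch"] else "other",
    "report": lambda deps: f"[{deps['classify']}] {deps['summarize']}",
}

# task -> set of tasks whose output it needs
DEPENDENCIES = {
    "fetch": set(),
    "summarize": {"fetch"},
    "classify": {"fetch"},
    "report": {"summarize", "classify"},
}


def run_pipeline(tasks, dependencies):
    results = {}
    # static_order() yields every task only after all of its dependencies
    for name in TopologicalSorter(dependencies).static_order():
        deps = {d: results[d] for d in dependencies[name]}
        results[name] = tasks[name](deps)
    return results


print(run_pipeline(TASKS, DEPENDENCIES)["report"])  # → [technology] LangChain connects LLMs...
```

Declaring dependencies instead of a hard-coded sequence also reveals which tasks are independent (here summarize and classify), so they could run in parallel.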

🧪 Test and Iterate

Before deployment, thorough testing is essential. LangChain provides visualization tools (like graph views) to help you debug and optimize your workflow logic.

Example:

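```python
# workflow is the runnable from the "Define Your Workflow" step
print(workflow.get_graph().draw_mermaid())  # Mermaid diagram of the steps

# Minimal test cases: run several inputs at once and check each output
test_inputs = ["  hello  ", "status report"]
for text, output in zip(test_inputs, workflow.batch(test_inputs)):
    assert output, f"empty output for {text!r}"
```

Testing helps you catch inefficiencies, refine agent behavior, and ensure that your workflow delivers reliable, accurate results.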

☁️ Deploy and Scale

Finally, deploy your workflow in a production environment. This could involve:

- Integrating with cloud platforms (AWS, Azure, GCP)

- Using LangServe or FastAPI for API deployment

- Scaling with containerization tools like Docker or Kubernetes

Example:

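```python
from fastapi import FastAPI
from langserve import add_routes  # pip install "langserve[server]"

app = FastAPI()
# Serves the workflow at /workflow/invoke, /workflow/batch and /workflow/stream
add_routes(app, workflow, path="/workflow")
# Start with: uvicorn main:app (assuming this file is main.py)
```

A well-deployed LangChain workflow can handle large datasets, multiple concurrent users, and complex business logic, making it ideal for enterprise AI and automation applications.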
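Serving many concurrent users usually means running model calls concurrently while capping how many are in flight at once, to stay within provider rate limits. The asyncio sketch below illustrates that pattern. Here fake_model_call is a hypothetical stand-in for an async model call (LangChain runnables expose ainvoke and abatch for this), and the default limit of 2 is an arbitrary example value.

```python
import asyncio


async def fake_model_call(prompt: str) -> str:
    """Hypothetical stand-in for an async LLM call, such as a runnable's ainvoke."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"answer to: {prompt}"


async def handle_requests(prompts, max_concurrent=2):
    semaphore = asyncio.Semaphore(max_concurrent)
    in_flight = 0
    peak = 0

    async def limited_call(prompt):
        nonlocal in_flight, peak
        async with semaphore:  # at most max_concurrent calls run at the same time
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_model_call(prompt)
            finally:
                in_flight -= 1

    # gather returns results in the same order as the input prompts
    answers = await asyncio.gather(*(limited_call(p) for p in prompts))
    return answers, peak


answers, peak = asyncio.run(handle_requests([f"question {i}" for i in range(5)]))
print(answers[0], "| peak concurrency:", peak)
```

A semaphore keeps throughput high without sending every request at once. The right limit depends on your provider's rate limits and is something to tune, not a fixed rule.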

Integrating LangChain with AI Agents for Workflow Automation

Integrating LangChain with AI agents enables seamless AI-driven automation across complex business workflows. By connecting multiple AI models, APIs, and data sources, LangChain helps AI agents communicate, reason, and execute tasks automatically. This integration streamlines workflow automation, reduces manual intervention, and enhances decision-making efficiency—making it a powerful solution for businesses aiming to scale their AI and automation capabilities.

It helps to understand what AI agents are: programs that take autonomous actions in response to user queries. Some types include:

- Reactive Agents – Act based on immediate inputs

- Deliberative Agents – Plan and make decisions over multiple steps

- Tool-Using Agents – Connect external tools, APIs, and services (for example, agents built on OpenAI's tool-calling models)

- Agentic AI & Agent Tools – Advanced agents capable of self-directed tasks
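The difference between reactive and deliberative agents can be shown without any framework. In the sketch below, both agents and the toy price tools are hypothetical. The reactive agent maps each input straight to one action, while the deliberative agent first writes a multi-step plan and then executes it, passing earlier results forward to the final step.

```python
SHOP_TOOLS = {
    "lookup_price": lambda item: {"apple": 0.5, "bread": 2.0}.get(item, 0.0),
    "total": lambda prices: round(sum(prices), 2),
}


def reactive_agent(query: str) -> float:
    """Reactive: one immediate action based on the current input."""
    return SHOP_TOOLS["lookup_price"](query)


def deliberative_agent(items: list[str]) -> float:
    """Deliberative: plan all steps first, then execute them in order."""
    plan = [("lookup_price", item) for item in items] + [("total", None)]
    prices = []
    for tool_name, arg in plan:
        if tool_name == "lookup_price":
            prices.append(SHOP_TOOLS[tool_name](arg))
        else:  # final step consumes the results of the earlier steps
            return SHOP_TOOLS[tool_name](prices)
    return 0.0


print(reactive_agent("bread"))                          # → 2.0
print(deliberative_agent(["apple", "apple", "bread"]))  # → 3.0
```

In an LLM-based agent the plan would be generated by the model rather than hard-coded, but the split between planning and execution is the same.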

Integrating LangChain with AI agents allows:

- Customer Support Bots to provide real-time assistance

- Legal Document Reviews using text summarisation

- Accessing external data sources like Google Maps, Reddit Search, or Tavily Search

- Automation of enterprise and document workflows

The synergy of LangChain and AI agents transforms ordinary processes into intelligent, self-managing systems.

Future Trends and Innovations with LangChain AI

Future-ready businesses are increasingly investing in custom LangChain chatbot development to create intelligent, context-aware chatbots that enhance customer interactions and automate support through advanced AI workflows.

To bring these innovations to life, many organisations choose to hire LangChain AI developers who specialize in building scalable, data-driven automation systems, integrating LLMs, and optimizing workflow performance for enterprise-grade AI solutions.

The AI landscape is rapidly evolving, and LangChain is at the forefront of innovation:

- Agentic AI and AI development – Smarter, autonomous agents capable of handling complex workflows

- Enhanced LangChain chatbot capabilities with conversational memory and feedback mechanisms

- Integration with Hugging Face, Google’s PaLM, and Google Gemini for richer AI experiences

- Growing adoption of LangChain Expression Language (LCEL), a declarative way to compose prompts, models, and tools into chains

These advancements make LangChain a cornerstone of modern AI workflow automation, ensuring businesses stay competitive in the era of Generative AI.

Conclusion

LangChain is more than a framework—it’s a powerful platform for building intelligent AI systems and automating complex workflows. By combining AI agents, memory management, and robust workflow automation tools, organisations can streamline processes, reduce errors, and unlock new business opportunities.

From chatbots to data processing pipelines and visual workflows, LangChain covers a wide range of use cases. With an active ecosystem, open-source access, and integrations with current AI platforms, it helps you keep pace with a fast-changing AI landscape.

People Also Ask

How does LangChain support multi-language or non-English AI workflows?

LangChain works with multilingual AI models and supports non-English prompts, enabling localized and global workflows.

Can LangChain be used for AI-driven analytics and insights beyond automation?

Yes, it can analyze, summarize, and generate insights from complex data using AI agents and chains.

What security measures does LangChain offer for sensitive workflow data?

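LangChain is a library, so most protection comes from how you deploy it. Keep API keys in environment variables or a secrets manager (LangChain's model integrations hold keys as masked secret values so they are not printed in logs), choose memory and vector stores that support encryption, and enforce access control in your own platform. LangSmith, LangChain's hosted platform, adds features such as role-based access control.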

How can LangChain integrate with low-code or no-code platforms?

It can connect to these platforms through APIs: for example, a LangChain workflow served over HTTP (for instance with LangServe or FastAPI) can be called from tools like Zapier, Power Automate, or Bubble.
