Developers, architects, and businesses face one of their most significant architectural decisions when developing, deploying, managing, and scaling applications. With cloud platforms evolving rapidly, more businesses are building cloud-native applications and services. Choosing between the two most common deployment models for cloud applications, serverless (event-driven) and container-based, has a major impact on cost, scalability, operational control, and development speed.

BuildNexTech can help businesses analyze their cloud architecture options, choose the architecture that fits their business and technical requirements, and identify which deployment models best support their long-term success.

✨ Key Insights from This Article:

☁️ Serverless vs containerized deployments: choosing the right cloud-native model impacts cost, scale, and speed

⚡ Serverless excels in event-driven apps, APIs, spiky workloads, and rapid development with minimal ops

🛠️ Containers provide portability, control, and predictable performance for long-running or stateful apps

🎯 Decision framework: select deployment based on workload type, business goals, and technical constraints

💡 Hybrid solutions can combine serverless and containers for maximum flexibility and efficiency

Introduction: Navigating the Cloud Deployment Landscape

Companies that develop software in the cloud can move much faster and with greater flexibility than ever before. Instead of managing physical servers and software platforms themselves, businesses using cloud-native development can build new applications and services quickly while benefiting from better scalability and more efficient use of IT resources. At the same time, cloud-native adoption is growing faster than many businesses can manage.

- Organizations are moving away from traditional IT infrastructure development.

- Cloud deployment models now support global scalability and agility

- Serverless and containerized deployments dominate modern software deployment strategies

From Traditional Infrastructure to Cloud-Native Choices

Running virtual machines and managing them by hand has traditionally made software deployment operationally complex. Cloud-native methodologies now abstract much of that infrastructure management away from application development and deployment.

- Shift from host operating system dependency to cloud-managed services

- The adoption of microservices architecture and automated deployment in cloud computing

This shift has increased the focus on scalability, security, and compliance as organizations' cloud environments evolve. Today, serverless computing and containerization are two of the most common ways to deploy applications to the cloud.

Understanding Serverless Deployments

Serverless deployments hide infrastructure management from the team, so developers can focus on writing application code. In a serverless environment, the cloud provider automatically handles provisioning, scaling, and the runtime environment.

- No need to manage servers or operating systems

- Functions execute in response to events or HTTP requests

- Pay-per-execution billing reduces idle resource costs

Serverless is increasingly used as an architectural style for workloads where speed and agility are critical, such as building RESTful APIs or processing data in response to events, and it makes scalable designs easier to build.

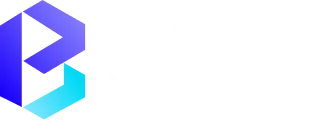

What Serverless Computing Is and How It Works

Function-as-a-Service (FaaS), the most common form of serverless computing, runs functions only when they are invoked, without a persistent server for the team to manage. Services such as AWS Lambda, Azure Functions, and Google Cloud Functions execute short-lived functions without requiring long-running infrastructure.

- Functions respond to API calls, data streams, or database changes

- Automatic scaling based on incoming requests

- Cloud vendor manages the runtime environment and the security of the underlying infrastructure

This model enables very fast application development while reducing the company's operational burden, which makes it a strong fit for many cloud-native workloads.
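To make the invocation model concrete, here is a minimal sketch of an HTTP-triggered function written for AWS Lambda's Python runtime behind an API Gateway proxy integration. The `lambda_handler(event, context)` signature and the `statusCode`/`headers`/`body` response shape follow Lambda's conventions; the `name` query parameter and the greeting are illustrative assumptions.

```python
import json


def lambda_handler(event, context):
    """Minimal HTTP handler in the style of an AWS Lambda function behind API Gateway.

    Assumes a proxy-integration event; the "name" query parameter is hypothetical.
    """
    # queryStringParameters is None when the request carries no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")

    # The platform calls this function once per request: there is no server loop
    # or port binding in the code.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; in production the platform
    # supplies both the event and the context object.
    fake_event = {"queryStringParameters": {"name": "BuildNexTech"}}
    print(lambda_handler(fake_event, None))
```

Everything outside the function body (provisioning, scaling, routing the HTTP request) is the provider's job, which is exactly the operational work serverless removes.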

Key Benefits: Auto-Scaling, Pay-Per-Use, and Reduced Operations

The greatest benefits of serverless computing are simpler operations and cost efficiency. Businesses do not have to provision, and pay for, capacity reserved for peak traffic.

- Auto-scaling adjusts resources automatically to unpredictable workloads

- The pay-per-execution model ties costs directly to usage, making them easier to track

- Reduced infrastructure management accelerates development cycles

At BuildNexTech, we see serverless architectures delivering strong ROI for startups and enterprises handling spiky traffic, APIs, and short-lived tasks.
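The pay-per-use trade-off is easiest to see with arithmetic. This sketch compares a function billed per request and per GB-second (the billing model AWS Lambda uses) with containers billed per instance-hour. All prices are placeholder assumptions, not current list prices, and free tiers, networking, and storage are ignored; substitute your provider's real rates.

```python
# Illustrative placeholder prices (assumptions, not quotes from any provider).
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667
CONTAINER_HOURLY_PRICE = 0.04  # hypothetical per-instance hourly rate
HOURS_PER_MONTH = 730


def serverless_monthly_cost(requests, avg_duration_s, memory_gb,
                            price_per_million=PRICE_PER_MILLION_REQUESTS,
                            price_per_gb_s=PRICE_PER_GB_SECOND):
    """Pay-per-execution: pay for requests plus the compute time actually consumed."""
    request_cost = requests / 1_000_000 * price_per_million
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_s
    return request_cost + compute_cost


def container_monthly_cost(instances, hourly_price=CONTAINER_HOURLY_PRICE,
                           hours=HOURS_PER_MONTH):
    """Provisioned capacity: instances are billed whether or not traffic arrives."""
    return instances * hourly_price * hours


if __name__ == "__main__":
    always_on = container_monthly_cost(instances=2)
    for monthly_requests in (1_000_000, 10_000_000, 100_000_000):
        fn = serverless_monthly_cost(monthly_requests, avg_duration_s=0.2, memory_gb=0.5)
        cheaper = "serverless" if fn < always_on else "containers"
        print(f"{monthly_requests:>11,} req/month: serverless ${fn:,.2f} "
              f"vs containers ${always_on:,.2f} -> {cheaper}")
```

With these made-up numbers, low or bursty volume favors functions while sustained high volume favors always-on containers; the crossover point shifts with real prices, memory size, and execution time.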

Understanding Containerized Deployments

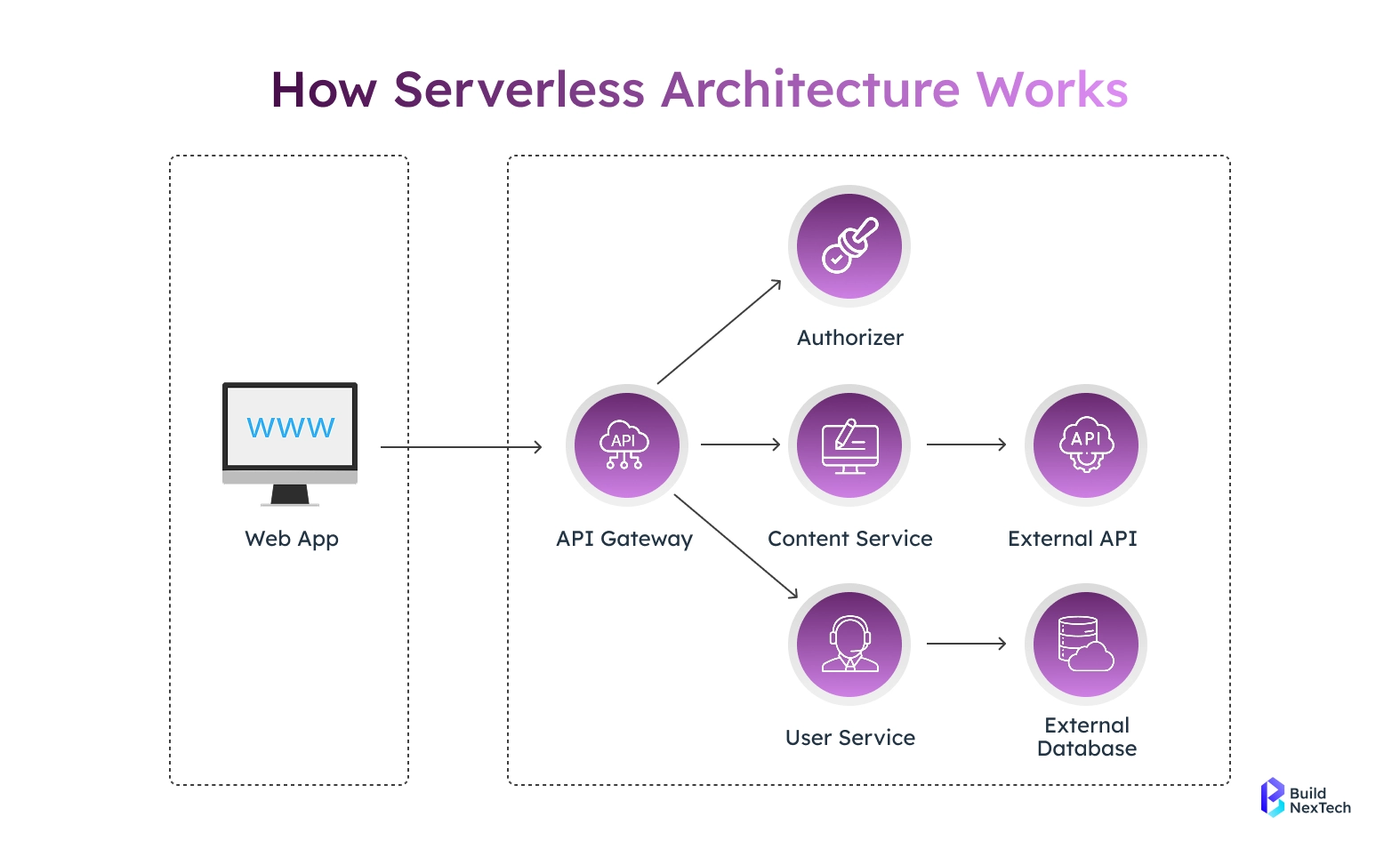

Containerized applications are packaged, together with their dependencies, into a lightweight container image. Tools such as Docker, for building and running containers, and Kubernetes, for orchestrating them, help keep development and production environments consistent.

- Containers run on shared host system kernels

- Applications remain isolated and portable

- Widely used in container-based architectures

Containerization is a foundational pillar of modern microservices and hybrid architecture strategies and a reliable way to package cloud applications.

What Containerization Is and Why Teams Use It

Containerization allows teams to bundle application code, libraries, and configurations into a single container image. This ensures predictable behavior across environments.

- Dockerfile defines the application runtime setup

- Container registry stores versioned images

- CI/CD pipelines automate containerized deployments

This approach is favored by teams needing granular control over runtime environments and deployment strategies.

Strengths of Containers: Control, Portability, and Predictability

The portability and consistency of containers make them especially well suited to hybrid, multi-cloud, and cloud-agnostic infrastructure.

- Kubernetes clusters handle scheduling, scaling, and orchestration, helping organizations run containers at enterprise scale

- Container orchestration tools enable rolling updates.

- Strong support for persistent storage and stateful applications

For complex applications and long-running workloads, containerized deployments are often the most dependable option.
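Rolling updates are one example of the control containers give you. In a Kubernetes Deployment using the `RollingUpdate` strategy, `maxSurge` and `maxUnavailable` (both 25% by default) bound how many pods can exist and how many must stay available while new versions roll out; Kubernetes rounds the surge percentage up and the unavailable percentage down. This small sketch only reproduces that arithmetic; it does not talk to a cluster.

```python
def rolling_update_bounds(replicas, max_surge_pct=25, max_unavailable_pct=25):
    """Pod-count bounds during a Kubernetes RollingUpdate with percentage settings.

    maxSurge rounds up and maxUnavailable rounds down, as Kubernetes does.
    Returns (max_total_pods, min_available_pods).
    """
    max_surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    max_unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas + max_surge, replicas - max_unavailable


if __name__ == "__main__":
    for replicas in (3, 4, 10):
        most, least = rolling_update_bounds(replicas)
        print(f"{replicas} replicas: at most {most} pods, at least {least} available")
```

Using integer arithmetic for the rounding avoids floating-point surprises with percentages that do not divide evenly.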

Serverless vs Containerized Deployments: Key Differences

Although both serverless and containerized deployments support cloud-native application development, they differ fundamentally in how infrastructure, scaling, and execution are handled. These differences directly affect deployment strategies, cost structures, operational effort, and long-term scalability for modern applications.

- Serverless computing removes direct interaction with servers and runtime management.

- Containerized deployments rely on container images and orchestration layers

- Each approach optimizes resource utilization in different ways.

Understanding these contrasts helps organizations select an architecture that aligns with workload behavior, performance expectations, and operational maturity.

Comparing Serverless vs Containerized Deployments: Key Features

Architecture, Scaling, Cost, and Operational Comparison

From an architectural perspective, serverless architectures execute application code as discrete functions, while containerized applications typically run as long-lived processes inside containers such as Docker containers. Scaling also differs significantly between the two models.

- Serverless platforms scale automatically with the number of incoming requests or events.

- Containers scale through orchestration tools such as Kubernetes and Docker Swarm.

- Serverless uses pay-per-execution billing, while containers require capacity planning.

Operationally, serverless reduces infrastructure management, whereas containers offer greater control over the runtime environment and resource allocation.
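Container scaling is rule-driven rather than per-request. Kubernetes' Horizontal Pod Autoscaler computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skips changes when that ratio is within a tolerance (0.1 by default), and clamps the result to the configured minimum and maximum. The sketch below reproduces only that core rule; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, and the min/max values here are assumptions.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Core Horizontal Pod Autoscaler rule, simplified for illustration."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # e.g. 4 pods averaging 90% CPU against a 60% target
    print(hpa_desired_replicas(4, current_metric=90, target_metric=60))
```

A serverless platform makes no comparable calculation visible to you: each concurrent request simply gets its own function instance, up to the provider's limits.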

When Serverless Is the Better Choice

Serverless is best suited for scenarios where flexibility, speed, and cost efficiency outweigh the need for deep infrastructure control. It enables teams to focus on application development without worrying about server provisioning or orchestration platform complexity.

- Ideal for dynamic and unpredictable workloads

- Reduces operational overhead and maintenance effort

- Supports rapid experimentation and iteration

For organizations prioritizing agility, serverless often delivers faster results with lower operational risk.

Event-Driven Workloads, Spiky Traffic, and Rapid Development

Event-driven systems are a natural fit for serverless environments, where functions execute only in response to specific triggers. This model handles sudden traffic increases without manual intervention.

- Responds efficiently to HTTP requests and API calls

- Scales out rapidly during traffic spikes, within the provider's concurrency limits

- Eliminates idle infrastructure costs

These characteristics make serverless highly effective for APIs, data streams, and Internet of Things use cases.
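How far a function must scale during a spike can be estimated with Little's law: concurrency is roughly requests per second multiplied by average duration in seconds, which is the same estimate AWS documents for Lambda. Platforms cap concurrency per account or per function, so the limit is a parameter here; the 1,000 used in the example is an assumed value, not a guaranteed quota.

```python
import math


def required_concurrency(requests_per_second, avg_duration_s):
    """Little's law: in-flight executions = arrival rate x time in system."""
    return math.ceil(requests_per_second * avg_duration_s)


def spike_fits(peak_rps, avg_duration_s, concurrency_limit):
    """True if the peak can be served without hitting the concurrency cap."""
    return required_concurrency(peak_rps, avg_duration_s) <= concurrency_limit


if __name__ == "__main__":
    ASSUMED_LIMIT = 1_000  # assumption; check your account's actual quota
    for peak in (100, 1_000, 5_000):
        need = required_concurrency(peak, avg_duration_s=0.5)
        print(f"{peak} req/s needs ~{need} concurrent executions; "
              f"fits: {spike_fits(peak, 0.5, ASSUMED_LIMIT)}")
```

Halving function duration halves the concurrency a spike needs, which is one reason short, focused functions suit spiky traffic.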

Background Processing, APIs, and Short-Lived Tasks

Short-lived and asynchronous tasks benefit significantly from serverless execution models. These workloads typically do not require persistent storage or long-running processes.

- Background jobs execute independently.

- API endpoints integrate seamlessly with API Gateway

- Monitoring and logging tools provide real-time visibility

Serverless computing keeps resource utilization efficient for transient workloads, since capacity is consumed only while functions run.
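Background jobs are often fed to functions through a queue. This sketch assumes an AWS Lambda function triggered by Amazon SQS: each record in `event["Records"]` carries a `messageId` and a `body`, and returning `batchItemFailures` asks Lambda to retry only the failed messages. That partial-failure response is honored only when `ReportBatchItemFailures` is enabled on the event source mapping. `process_job` and its `task` field are hypothetical.

```python
import json


def process_job(job):
    """Hypothetical unit of background work; replace with real logic."""
    if not isinstance(job, dict) or "task" not in job:
        raise ValueError("job must be an object with a 'task' field")
    return f"done:{job['task']}"


def handler(event, context):
    """Process an SQS batch, reporting only the messages that failed."""
    failures = []
    for record in event.get("Records", []):
        try:
            job = json.loads(record["body"])
            process_job(job)
        except Exception:
            # Any failure marks just this message for retry; the rest of the
            # batch is treated as successfully processed.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Reporting per-message failures keeps one bad message from forcing the whole batch to be retried.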

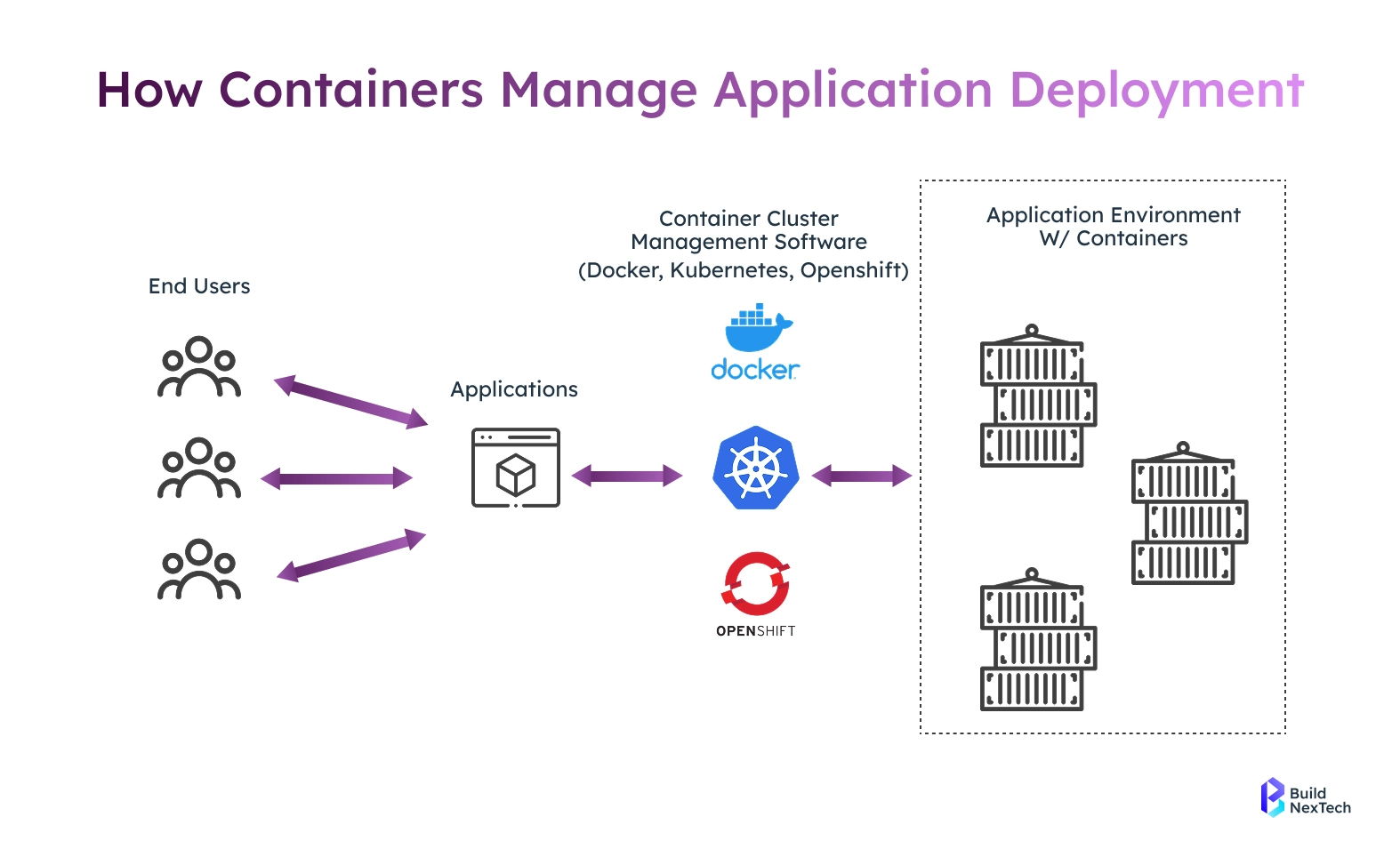

Making the Right Deployment Choice: Trade-Offs and Decision Framework

Selecting the right deployment model requires a structured evaluation of technical constraints, business objectives, and long-term scalability requirements. Both serverless and containerized deployments present trade-offs that must be carefully considered.

- Analyze workload duration and execution patterns

- Assess security, compliance, and vulnerability concerns

- Evaluate development lifecycle and CI/CD pipelines

A clear decision framework helps organizations avoid overengineering or underutilization.
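A decision framework can start as a few explicit rules. The sketch below is an illustrative set of heuristics, not BuildNexTech's actual framework: workloads longer than AWS Lambda's 15-minute maximum timeout, stateful services, and workloads needing portability go to containers, spiky short-lived work goes to serverless, and a mix across workloads suggests a hybrid architecture. The `Workload` fields and thresholds are assumptions, and other providers' time limits differ.

```python
from dataclasses import dataclass

LAMBDA_MAX_TIMEOUT_S = 900  # AWS Lambda's maximum timeout; other platforms differ


@dataclass
class Workload:
    name: str
    max_duration_s: float
    stateful: bool
    spiky_traffic: bool
    needs_portability: bool = False


def recommend(w: Workload) -> str:
    """Illustrative per-workload rule set."""
    if w.max_duration_s > LAMBDA_MAX_TIMEOUT_S or w.stateful or w.needs_portability:
        return "containers"
    if w.spiky_traffic:
        return "serverless"
    return "either"


def recommend_architecture(workloads):
    """Per-workload picks plus an overall suggestion."""
    picks = {w.name: recommend(w) for w in workloads}
    kinds = set(picks.values()) - {"either"}
    if len(kinds) > 1:
        overall = "hybrid"
    elif kinds:
        overall = kinds.pop()
    else:
        overall = "either"
    return picks, overall


if __name__ == "__main__":
    api = Workload("orders-api", max_duration_s=2, stateful=False, spiky_traffic=True)
    etl = Workload("nightly-etl", max_duration_s=3600, stateful=False, spiky_traffic=False)
    print(recommend_architecture([api, etl]))
```

Encoding the rules makes the trade-offs reviewable; real decisions would also weigh cost, compliance, and team skills.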

Balancing Workloads, Business Goals, and Serverless Trade-Offs

While serverless simplifies deployment workflows, it introduces considerations such as cold starts, execution limits, and vendor lock-in. Containers, by contrast, provide more predictable runtime behavior.

- Containers support persistent storage and stateful services

- Serverless accelerates deployment workflows

- Hybrid architecture combines both models effectively

At BuildNexTech, we often recommend hybrid solutions that balance scalability, control, and operational efficiency based on real-world usage patterns.

Conclusion: Making Serverless a Strategic Advantage

As cloud-native application development has evolved, serverless computing has made software deployment easier by abstracting away the complexity associated with infrastructure. The automatic scaling and consumption-based pricing of serverless computing make it ideal for developing new types of event-driven applications in the cloud.

Serverless computing has become a powerful component of modern cloud architecture, particularly for scalable, event-driven, and API-centric applications. While containerized deployments remain essential for complex systems and long-running workloads, serverless offers exceptional simplicity and elasticity.

- Faster deployment with reduced infrastructure management

- Improved cost efficiency through pay-per-execution billing

- Strong alignment with cloud-native practices

By partnering with BuildNexTech, organizations can confidently design and deploy serverless solutions and cloud-native solutions that support long-term growth, resilience, and innovation.

People Also Ask

Can serverless and containerized deployments be used together in the same architecture?

Yes, hybrid architectures can use containers for long-running tasks and serverless for event-driven functions.

How do debugging and troubleshooting differ in serverless compared to containers?

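Serverless is generally harder to debug because each execution is short-lived and difficult to reproduce locally, so teams rely heavily on logs and tracing; containers offer more control and can be run and inspected in persistent environments.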
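Because you cannot attach to a function after it finishes, structured logs tagged with the invocation's request ID are the usual substitute. This sketch assumes AWS Lambda's Python runtime, whose context object exposes `aws_request_id`; the logger name and `order_id` field are hypothetical, and the fallback value lets the same code run locally.

```python
import json
import logging

# Locally this logs to stderr; the AWS Lambda Python runtime already attaches
# its own log handler, in which case basicConfig() does nothing.
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("orders-function")  # hypothetical logger name
logger.setLevel(logging.INFO)


def log_event(context, message, **fields):
    """Emit one JSON log line tagged with the invocation's request ID."""
    record = {
        "message": message,
        # Lambda's context exposes aws_request_id; fall back when running locally.
        "request_id": getattr(context, "aws_request_id", "local"),
        **fields,
    }
    line = json.dumps(record)
    logger.info(line)
    return line


def handler(event, context):
    log_event(context, "order received", order_id=event.get("order_id"))
    # ... business logic would go here ...
    log_event(context, "order processed", order_id=event.get("order_id"))
    return {"statusCode": 200}
```

One JSON object per line lets log tools filter every line from a single failing invocation by `request_id`.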

Is vendor lock-in a major concern when adopting serverless platforms?

It can be. Serverless applications often rely on proprietary triggers and managed services, making migration more difficult than with containers.

What security considerations are unique to serverless deployments?

Focus on function permissions, secure APIs, event data handling, and least-privilege access.
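Least privilege usually means giving each function its own narrowly scoped role. This sketch builds an AWS IAM policy document (the standard `Version`/`Statement`/`Effect`/`Action`/`Resource` structure) that grants a function only the DynamoDB actions it needs on a single table, and rejects wildcard resources. The allow-list, table name, and account ID are illustrative assumptions.

```python
import json

# Hypothetical allow-list of actions this particular function may ever need.
ALLOWED_ACTIONS = {"dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:Query"}


def least_privilege_policy(table_arn, actions):
    """Build an IAM policy granting only the listed actions on one table."""
    unexpected = set(actions) - ALLOWED_ACTIONS
    if unexpected:
        raise ValueError(f"actions outside the allow-list: {sorted(unexpected)}")
    if "*" in table_arn:
        raise ValueError("wildcard resources are not allowed")
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": sorted(set(actions)),
            "Resource": table_arn,
        }],
    }


if __name__ == "__main__":
    arn = "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"  # example ARN
    print(json.dumps(least_privilege_policy(arn, ["dynamodb:GetItem"]), indent=2))
```

Generating policies in code makes overly broad grants easy to catch in review or CI before they reach production.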
