For modern businesses navigating the ever-changing digital economy, digital transformation is not optional; it is a catalyst for growth and resilience. Still, many businesses have yet to realize its promised benefits. What separates a stalled effort from a successful change is often one essential function: data engineering.

At BuildNexTech, we recognize that true enterprise digital transformation is a deeply data-driven journey. Without a reliable, scalable, and high-quality foundation for your information assets, initiatives such as AI-driven digital transformation or attempts to improve the customer experience will fail to materialize. A strong data engineering architecture ultimately determines whether the transformation generates real business value.

✨ Key Insights from This Article:

🔧 Data engineering powers the core foundation of enterprise-wide digital transformation.

🏗️ Modern data architectures and pipelines enable scalable, future-ready systems.

📊 High-quality data drives insights, AI, automation, and smarter decisions.

⚙️ Data quality, integration, governance, and scalability are essential pillars.

🚀 BuildNexTech delivers end-to-end data engineering expertise to accelerate transformation.

The Role of Data Engineering as the Unsung Hero of Digital Transformation

Digital transformation demands rethinking how an organization works, serves its clients, and generates revenue. It spans everything from upgrading legacy systems to building new product capabilities with analytics tools.

- Customer Experiences: Real-time analytics allows personalization of interactions and a deeper understanding of customer behavior.

- Operational Efficiency: Automation and streamlined data processes help optimize supply chains and reduce manual effort.

- New Revenue Streams: High-quality data is the fuel that powers new data-driven products and services.

Many transformation efforts fail because the data architecture can't support modern demands. Even substantial investments in machine learning or analytics will be wasted if the data is fragmented, of poor quality, or locked in silos. A successful digital transformation initiative rests on strong data engineering that can handle data volume, variety, and velocity.

The Promise of Digital Transformation

An effective roadmap for digital transformation seeks to create a more agile, data-driven, customer-centric organization. Modern technology, including cloud platforms and data-driven architectures, is what makes that vision practical.

The vision includes:

- Flexibility and Resilience: The capacity to adapt quickly to shifting market conditions, backed by modern data engineering.

- Extensive Personalization: Combining real-time data with customer context to tailor each interaction.

- Creativity: High-quality data lets designers iterate and move quickly.

Digital transformation solutions rely on accessible, accurate data. Skilled data engineers make this possible by building streamlined data pipelines, compliant systems, and reliable architectures.

Why Data Engineering Is Non-Negotiable Today

In a data-driven world, data is a company’s most valuable strategic asset. Data engineering is what turns unused data into actionable insights. It ensures that data is collected, stored, transformed, and made available to data scientists, dashboards, and analytics tools.

A few requirements to consider:

- Dependable Data Pipelines: Pipelines must run reliably at big-data scale.

- Data Integration: Data from ERPs, CRMs, SaaS tools, and legacy systems must be combined into a consistent whole.

- Data Quality: Stringent data quality checks help ensure that the outputs of analytics and AI projects can be trusted.
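To make the data quality requirement more concrete, here is a minimal Python sketch of rule-based validation. The field names (`order_id`, `amount`, `currency`) and the rules themselves are hypothetical examples, not a prescribed standard; dedicated data quality frameworks exist, but the underlying idea is the same: every record is checked against named rules before it reaches analytics.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass(frozen=True)
class Rule:
    """A named data quality rule: a predicate that every record must satisfy."""
    name: str
    check: Callable[[Record], bool]


# Hypothetical rules for an orders feed; the field names are illustrative only.
RULES = [
    Rule("order_id present", lambda r: bool(r.get("order_id"))),
    Rule(
        "amount non-negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
    Rule(
        "currency has 3 letters",
        lambda r: isinstance(r.get("currency"), str)
        and len(r["currency"]) == 3
        and r["currency"].isalpha(),
    ),
]


def validate(records: list[Record], rules: list[Rule]):
    """Split records into those passing every rule and those failing, with reasons."""
    passed: list[Record] = []
    failed: list[tuple[Record, list[str]]] = []
    for rec in records:
        broken = [rule.name for rule in rules if not rule.check(rec)]
        if broken:
            failed.append((rec, broken))  # quarantine for review instead of loading
        else:
            passed.append(rec)
    return passed, failed
```

Failing records keep the names of the rules they broke, so they can be quarantined and reviewed rather than silently loaded into reports.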

Forward-looking organizations treat their data infrastructure as a strategic investment and adopt cloud data engineering, or bring in a data engineering services company, to accelerate progress.

Understanding the Foundation: What Is Data Engineering in a Digital World?

Data engineering is the discipline of building and maintaining the infrastructure that collects, stores, and processes the massive volumes of data an organization generates. It is the layer on which the digital ecosystem is built. Important data engineering skills include Python, SQL, and tools such as Apache Spark, Amazon Redshift, and Snowflake.

What Digital Transformation Really Requires

Digital transformation usually means more than adopting a tool or technology; it requires rethinking operations, workflows, and architecture. Across the enterprise, teams from data analysts to product managers need coherent, flexible, and trusted data: the most accurate data available.

- Coherent Data View: Combining data from CRMs, ERPs, and spreadsheets into one consistent view, something that is difficult for any organization to achieve.

- Scalable Architecture: Capable of supporting IoT, cloud platforms, and future growth.

- Security & Governance: Compliance with regulations such as GDPR and HIPAA, enforced through data governance.

Data engineering teams turn these complex requirements into operational systems that support the transformation.

The Core Mission of Data Engineering

The goal of data engineering is to make data consumable through well-designed ETL and ELT pipelines. ETL (Extract, Transform, Load) transforms data before loading it into storage; ELT (Extract, Load, Transform) loads raw data first and transforms it inside the target system, which often scales better on cloud warehouses.
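The difference between ETL and ELT is easiest to see side by side. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse; the table names and sample rows are hypothetical. Both paths produce the same clean table, but ELT keeps the untouched raw data in the target system and runs the transformation there.

```python
import sqlite3

# Hypothetical raw rows from a source system: (order id, customer name, amount as text).
RAW_ORDERS = [
    ("o1", " Alice ", "20.50"),
    ("o2", "BOB", "5.00"),
    ("o3", "carol", None),  # missing amount: should be filtered out
]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in application code first, then load only the clean rows."""
    conn.execute("CREATE TABLE orders_etl (id TEXT, customer TEXT, amount REAL)")
    cleaned = [
        (oid, name.strip().capitalize(), float(amount))
        for oid, name, amount in RAW_ORDERS
        if amount is not None
    ]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load raw rows untouched, then transform with SQL inside the database."""
    conn.execute("CREATE TABLE orders_raw (id TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT id,
               UPPER(SUBSTR(TRIM(customer), 1, 1)) || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM orders_raw
        WHERE amount IS NOT NULL
        """
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    etl(conn)
    elt(conn)
    print(conn.execute("SELECT * FROM orders_etl ORDER BY id").fetchall())
    print(conn.execute("SELECT * FROM orders_elt ORDER BY id").fetchall())
```

Keeping `orders_raw` around is one reason ELT is popular on cloud warehouses: if the cleaning rules change, the data can be re-transformed without extracting it from the source again.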

The key responsibilities are:

- Data ingestion: Reliably collecting data from diverse sources, using technologies such as Apache Kafka.

- Data transformation: Cleaning, standardizing, and properly formatting data for analytics.

- Data storage: Choosing the right storage layer, such as a data lake or a data warehouse like Google BigQuery.

For companies lacking internal skills, a data engineering consulting firm such as BuildNexTech offers a fast route to sophisticated capabilities.

The Core Pillars: Essential Functions of Data Engineering

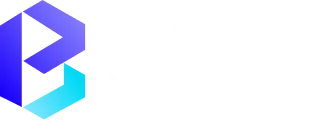

Building Scalable Data Infrastructure

A scalable data infrastructure accommodates rapid data growth and the adoption of new technologies. Important components include:

- Cloud Data Architectures to provide flexibility and scale.

- Data Lakes & Warehouses for raw and curated data, respectively.

- Infrastructure as Code (IaC) to allow for automated, consistent, and repeatable deployments.
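Infrastructure as Code works by declaring the desired state and letting a tool work out what must change. Tools such as Terraform follow this plan-then-apply pattern; the Python sketch below only illustrates the diffing idea, and the `Bucket` resource and its `tier` field are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bucket:
    """Hypothetical storage resource, described declaratively."""
    name: str
    tier: str  # e.g. "hot" or "archive"


def plan(desired: dict[str, Bucket], actual: dict[str, Bucket]) -> list[str]:
    """Diff the declared state against the deployed state and list required changes."""
    steps = []
    for name, res in sorted(desired.items()):
        if name not in actual:
            steps.append(f"create {name} ({res.tier})")
        elif actual[name] != res:
            steps.append(f"update {name}: {actual[name].tier} -> {res.tier}")
    for name in sorted(actual.keys() - desired.keys()):
        steps.append(f"delete {name}")  # no longer declared, so it should be removed
    return steps
```

Because the plan is computed from a diff, running it against an environment that already matches the declaration produces no steps. That idempotence is what makes repeated, automated deployments safe.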

BuildNexTech works alongside organizations to implement modern data architectures aligned with their long-term goals.

Designing Efficient Data Pipelines

Data pipelines are the automated channels that move and prepare data. A well-built pipeline provides:

- Low latency for processing data in real time

- Resilience and monitoring that ensure systems stay stable during failures

- Security across all stages of the pipeline

Data engineers can build these flows with tools such as Apache NiFi for data flow management and Apache Flink for stream processing.
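Resilience in a pipeline often starts with something as simple as retrying transient failures and logging every attempt so monitoring can pick it up. A minimal sketch, assuming each pipeline step can be wrapped as a zero-argument Python function; the `run_with_retries` name and its default delays are illustrative choices, not an API from Apache NiFi or Apache Flink, which have their own failure-handling features.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("pipeline")


def run_with_retries(
    step: Callable[[], T],
    name: str,
    max_attempts: int = 3,
    base_delay: float = 0.5,
) -> T:
    """Run one pipeline step, retrying failures with exponential backoff.

    Each attempt is logged so that a monitoring system can alert on repeated failures.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            result = step()
        except Exception as exc:  # in practice, retry only errors known to be transient
            log.warning("step %s failed on attempt %d/%d: %s", name, attempt, max_attempts, exc)
            if attempt == max_attempts:
                raise  # give up and surface the error to the orchestrator
            time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ... by default
        else:
            log.info("step %s succeeded on attempt %d", name, attempt)
            return result
    raise AssertionError("unreachable")
```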

Ensuring Data Quality & Integration

Data Quality and Data Integration define whether insights are trustworthy.

Practices include:

- Master Data Management to maintain authoritative records.

- Validation rules applied during data transformation to catch bad records.

- Metadata Management for transparency and lineage.

Enterprises with strong Data Quality Management create reliable foundations for their digital transformation initiatives.
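Master Data Management usually comes down to two decisions: how to recognize duplicate records, and which values survive when they are merged. The sketch below assumes a normalized email address is a reliable matching key and that the most recently updated non-empty value wins; both are illustrative rules, the sample records are hypothetical, and real MDM tools support much richer matching.

```python
from datetime import date

# Hypothetical customer records from two source systems (e.g. a CRM and an ERP).
SOURCE_RECORDS = [
    {"email": "Ana@Example.com", "name": "Ana Ruiz", "phone": None, "updated": date(2024, 3, 1)},
    {"email": "ana@example.com ", "name": "Ana R.", "phone": "555-0100", "updated": date(2024, 1, 15)},
    {"email": "li@example.com", "name": "Li Wei", "phone": "555-0199", "updated": date(2023, 11, 2)},
]


def golden_records(records: list[dict]) -> dict[str, dict]:
    """Merge duplicates into one master record per customer.

    Matching key: normalized email. Survivorship: for each field, keep the
    most recently updated non-empty value.
    """
    groups: dict[str, list[dict]] = {}
    for rec in records:
        key = rec["email"].strip().lower()
        groups.setdefault(key, []).append(rec)

    masters = {}
    for key, recs in groups.items():
        newest_first = sorted(recs, key=lambda r: r["updated"], reverse=True)
        master = {"email": key}
        for field in ("name", "phone"):
            master[field] = next((r[field] for r in newest_first if r[field]), None)
        masters[key] = master
    return masters
```

Here the two "Ana" rows collapse into one authoritative record that takes the newer name from one system and the only known phone number from the other.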

Driving Business Value Through Data Engineering

Robust data engineering unlocks measurable business value by enabling accurate decisions and advanced capabilities.

Enabling Data-Driven Decision Making

To make informed decisions, organizations need accurate, timely, and easily accessible data. Data engineering provides the foundation for turning raw data into actionable information by:

- Timely Reporting: Feeding dashboards and visualizations in tools such as Tableau or Power BI.

- Consistent Metrics: Applying standard metric definitions and data quality logic so that analytics are reliable.

- Consolidated Views: Providing a single view of data by integrating various operational systems.
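Consistent metrics are often achieved by defining each metric exactly once and having every report reuse that definition. In this hypothetical Python sketch, "net revenue" means gross sales minus refunds, excluding test orders; the definition, field names, and report functions are assumptions made for illustration.

```python
from decimal import Decimal


def net_revenue(orders: list[dict]) -> Decimal:
    """The single shared definition: gross sales minus refunds, ignoring test orders."""
    return sum(
        (Decimal(o["gross"]) - Decimal(o["refunded"]) for o in orders if not o["is_test"]),
        start=Decimal("0"),
    )


def finance_report(orders: list[dict]) -> dict:
    """Company-wide view; reuses the shared metric rather than redefining it."""
    return {"net_revenue": net_revenue(orders)}


def regional_dashboard(orders: list[dict], region: str) -> dict:
    """Regional view; same metric definition, filtered input."""
    regional = [o for o in orders if o["region"] == region]
    return {"region": region, "net_revenue": net_revenue(regional)}
```

Because both reports call the same `net_revenue` function, the regional figures always add up to the company-wide total.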

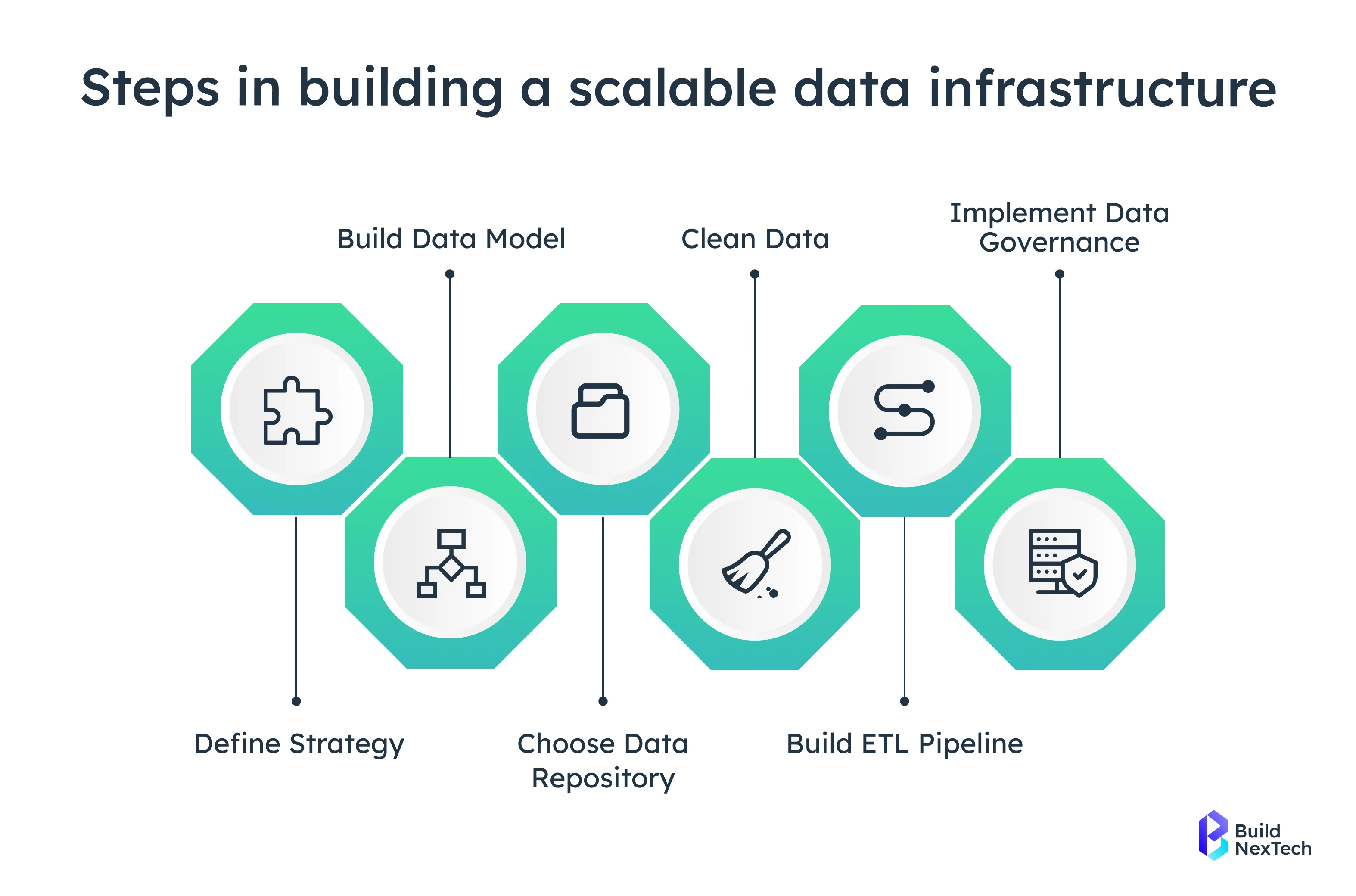

Powering AI, Analytics & Intelligent Automation

Modern artificial intelligence (AI), machine learning (ML), analytics platforms, and enterprise automation tools only deliver valuable results if the data behind them is clean, connected, and trustworthy. Data engineering is the layer that prepares, formats, and transforms that data so these complex systems can run accurately and efficiently.

Data engineering facilitates:

- AI model training, using curated, labeled, high-volume datasets that improve accuracy.

- Predictive analytics, which combines historical data with live data streams to forecast trends and business outcomes.

- Workflow automation that reacts to real-time inventory levels, adjusts delivery routes on the fly, and personalizes client experiences.

By outsourcing data engineering to BuildNexTech, you gain immediate access to critical expertise, robust solutions, and elastic infrastructure that can support even complex, demanding AI workloads.

Strategic Imperatives for a Future-Ready Data Engineering Capability

To become truly future-ready, organizations need to strengthen their data engineering foundation as data and AI demands grow. Modern architecture is required for scalability, and the tooling must empower teams to turn raw data into intelligence. A strong data engineering strategy bridges business goals and technology.

Aligning Data Strategy With Business Goals

A data strategy only provides true value when it aligns with your organization’s top business priorities, whether that’s better insight into customer behavior, using sensor data to improve operational efficiency, or using timely evidence to strengthen decision-making. The goal is to make sure that all data work relates directly to measurable business outcomes.

This involves:

- Goal-Oriented Modeling, which designs data around the specific questions business leaders want answered.

- Resource Prioritization, which focuses engineering resources on the projects with the highest potential impact instead of scattered analytics efforts.

- Collaboration, in which data architects, business analysts, and business decision-makers keep the work aligned from design to implementation.

Leveraging Modern Architectures for Scalability & Agility

As the data ecosystem grows larger and more complex, modern data architectures let organizations scale infrastructure and processes quickly, respond to business needs, and introduce new technologies smoothly. They also support flexibility, resilience, and the optimization of AI workloads across data engineering pipelines.

Modern data engineering takes advantage of progressive architectures such as:

- Cloud-Native Design, which allows for elastic scaling, pay-as-you-go economics, and more predictable latency.

- Data Mesh Architecture, which decentralizes ownership of data products so that domain teams can own, govern, and serve their own data products. Data Mesh is not a technology but an organizational and architectural paradigm.

- Serverless ETL (Extract, Transform, Load), which speeds up development, scales automatically, and reduces operational overhead because the provider manages the underlying infrastructure.

Organizations often accelerate this transformation by engaging digital strategy consultants or using an outsourced data engineering delivery model, which lets them adopt best-in-class architectures in their data engineering pipelines without lengthy projects.

Challenges & Best Practices in Data Engineering

Building a strong data foundation isn’t easy. Companies face several obstacles that slow down progress, complicate analytics, and weaken digital transformation outcomes.

Challenges include:

- Data Silos: Fragmented data sources delay integrations and make unified insights difficult.

- Talent Gaps: Skilled engineers capable of working with modern platforms remain limited.

- Change Management: Getting teams to adopt new systems and workflows correctly is often difficult and unpredictable.

Best practices:

- Automation: Reduces manual errors and boosts pipeline efficiency.

- Data Governance: Reinforces security, compliance, and quality across the data lifecycle.

- Standardized Toolkits: Consistent stacks—such as Python, cloud-native platforms, and modular ETL frameworks—support scalable development.

By anticipating these barriers and applying proven methods from the start, companies create resilient, scalable data environments that inspire trust and enable confident decision-making throughout their digital transformation journey.

The Future Trajectory of Data Engineering

Growing Demands From AI & Real-Time Data

Contemporary AI systems rely on real-time data for accurate predictions, personalized experiences, and autonomous decision-making. As organizations adopt new AI capabilities, their data engineering efforts must adapt to these next-generation needs.

Future priorities include:

- Streaming architectures built on Apache Kafka, enabling real-time data ingestion and event-driven intelligence.

- Feature engineering that produces AI-ready datasets for greater model accuracy and context.

- Operationalizing models for production-grade reliability, monitoring, and continuous enhancement.

- Real-time data quality controls that help detect model drift and maintain confidence in AI.

- Scalable storage and compute to cater to anticipated data bursts from sensors, applications, and user behavior.
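One common real-time data quality control for AI is to compare the distribution of incoming feature values against a training-time baseline. The sketch below computes the Population Stability Index (PSI), a widely used drift measure; the bin count, the equal-width binning, and the small epsilon that avoids `log(0)` are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a recent sample.

    Equal-width bins span the baseline's min..max; values outside that range are
    clamped into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6
    return sum(
        (a - e) * math.log((a + eps) / (e + eps))
        for e, a in zip(shares(expected), shares(actual))
    )
```

A rule of thumb often quoted in practice treats PSI values above roughly 0.25 as a significant shift worth investigating, though each team should calibrate its own alert threshold.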

A data engineering consulting company like BuildNexTech helps companies shift to these advanced capabilities.

Case Example 1: Retail Enterprise Unlocks Real-Time Inventory Visibility

A large retail enterprise struggled with stockouts, delayed replenishment, and siloed legacy systems. BuildNexTech redesigned its data infrastructure using cloud data engineering and created real-time data pipelines across POS systems, warehouses, and ERP platforms.

Results achieved:

- Significant reduction in inventory mismatches

- Real-time visibility across all retail locations

- Improved demand forecasting accuracy

- Automated replenishment workflows that reduced operational overhead

This transformation was only possible because the retailer finally had unified, governed, and real-time data powering business decisions.

Conclusion: Data Engineering as the Backbone of Digital Transformation

Digital transformation aims to achieve agility and intelligence for enhanced experiences everywhere. However, without good data engineering, that goal remains a fantasy. BuildNexTech provides the data platform engineering, pipelines, and architecture needed to turn that vision into reality. A strong data foundation is the mechanism that moves organizations from strategy and ambition to tangible, operational results.

Whether the work involves building pipelines, modernizing legacy systems, or scaling a digital transformation consulting model, we can give your data engineering team the experience and confidence to build a dependable, scalable foundation for your transformation roadmap. With unified, high-quality data at the core of your ecosystem, every initiative, be it analytics, visualization, or automation, can deliver sustainable business value rather than isolated improvements.

These are not fantasies: organizations across all industries are seeing the real benefits of modern data engineering every day. Companies that invest early in their data infrastructure and data engineering see faster delivery, better customer experiences, and the ability to stay future-ready in an AI-driven world. Data engineering is no longer an optional agenda item; it is the backbone of sustainable digital transformation.

People Also Ask

How do data engineers ensure cost optimization in cloud-based data systems?

By monitoring storage/compute usage and applying auto-scaling, tiered storage, and serverless ETL models to prevent over-provisioning.
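Tiered storage can be reduced to a simple policy: the longer a dataset goes unread, the cheaper (and slower to retrieve) the tier it can live in. A minimal Python sketch; the tier names and the 30- and 180-day thresholds are hypothetical, and cloud providers implement this idea through their own lifecycle rules.

```python
from datetime import date

# Hypothetical policy: (maximum days since last access, tier name), checked in order.
TIERS = [(30, "hot"), (180, "cool")]
FALLBACK_TIER = "archive"


def choose_tier(last_accessed: date, today: date) -> str:
    """Return the storage tier a dataset should live in, based on how recently it was read."""
    age_days = (today - last_accessed).days
    for max_age, tier in TIERS:
        if age_days <= max_age:
            return tier
    return FALLBACK_TIER
```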

What’s the average timeline to implement a modern enterprise-grade data engineering platform?

Most enterprises take 3–9 months depending on legacy complexity, number of source systems, and level of real-time processing.

Can low-code platforms reduce the need for data engineering teams?

Low-code tools accelerate development but cannot replace data engineers for scalability, governance, and enterprise-wide orchestration.

What metrics can organizations track to measure the ROI of data engineering?

KPIs include reduced reporting time, lower operational cost, improved forecast accuracy, and increased automation efficiency.
