In 2026, data engineering is central to enterprise innovation. Companies at the forefront combine big data platforms, data pipelines, and cloud computing to turn raw data into actionable knowledge. They use it to generate useful insights and to better understand customer needs.

BuildNxtTech (BNXT.ai) helps organizations achieve this by providing real-time analytics and scalable data pipelines, enabling smarter and faster decision-making. As enterprises invest in modern data architectures and cloud solutions, BNXT.ai stands out by delivering tools that make complex data manageable, reliable, and ready for meaningful analysis.

What’s next? Keep scrolling to explore:

🚀 How Data Engineering Drives Enterprise Innovation & AI-Driven Analytics

🚀 Redefining Analytics with Data Pipelines, Lakehouses & Version Control

🚀 Tools, Platforms, and Cloud Solutions Empowering Modern Data Teams

🚀 Future Trends: Hybrid Cloud, Generative AI & Advanced Data Pipelines

🚀 Choosing Top Data Science Services Companies & Preparing for 2026

How Top Data Science Services Companies Are Shaping the AI Era

In a fast-changing environment where information moves ever more quickly, data scientists increasingly work with reputable data science service firms to extract accurate, actionable intelligence from complex and diverse data projects. These providers play a fundamental role in making trusted data usable and accessible. When engaged well, they also help deliver accurate, timely, and scalable insights. Their core functions include:

- Building Machine Learning Models: Designing and implementing machine learning models that identify patterns, predict trends, and surface actionable insights from large datasets to support data-driven decision-making.

- Automating Data Products: Automating repetitive data processes and data product workflows speeds up operations and reduces human error, freeing teams to focus on work that grows revenue or sharpens strategy.

- Delivering High Quality Data: Top providers put strict data quality frameworks in place that validate and cleanse data and keep datasets reliable, so analytics outputs and AI models perform as intended and stay true to the purpose of the analysis.

- Good Data Management Practices: Providers establish sound data governance and data management practices, including data storage, version control, and documentation, helping organizations ensure compliance, security, and long-term scalability.

These capabilities let data science service companies not only support data scientists but also help enterprises use AI more effectively, turning raw data into strategic business insights that drive innovation and growth.
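Here is a minimal Python sketch of a validate-and-cleanse step that illustrates the data quality work described above. The record shape (`order_id`, `amount`) and the rules are hypothetical assumptions chosen for the example. Production frameworks such as Great Expectations express checks like these declaratively rather than as hand-written loops.

```python
import math
from dataclasses import dataclass, field


@dataclass
class QualityReport:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (original record, reason) pairs


def validate_and_cleanse(records):
    """Cleanse raw order records and keep only those passing basic checks.

    The field names (order_id, amount) and the rules below are illustrative
    assumptions, not a standard schema.
    """
    report = QualityReport()
    seen_ids = set()
    for raw in records:
        # Cleansing: strip surrounding whitespace from every string field.
        rec = {k: v.strip() if isinstance(v, str) else v for k, v in raw.items()}

        order_id = rec.get("order_id")
        if not order_id:
            report.rejected.append((raw, "missing order_id"))
            continue

        # Validation: amount must parse as a finite, non-negative number.
        try:
            amount = float(rec.get("amount"))
        except (TypeError, ValueError):
            report.rejected.append((raw, "amount is not numeric"))
            continue
        if not math.isfinite(amount) or amount < 0:
            report.rejected.append((raw, "amount is negative or not finite"))
            continue

        # Deduplication: keep only the first occurrence of each order_id.
        if order_id in seen_ids:
            report.rejected.append((raw, "duplicate order_id"))
            continue
        seen_ids.add(order_id)

        rec["amount"] = amount
        report.valid.append(rec)
    return report


if __name__ == "__main__":
    sample = [
        {"order_id": " A1 ", "amount": "10.5"},
        {"order_id": "A1", "amount": "10.5"},
        {"order_id": "", "amount": "3"},
        {"order_id": "A2", "amount": "abc"},
        {"order_id": "A3", "amount": "-1"},
    ]
    result = validate_and_cleanse(sample)
    print(result.valid)
    print([reason for _, reason in result.rejected])
```

Rejected records are kept alongside a reason rather than silently dropped. This lets a team review or repair them later, which is one way a quality framework can stay "strict" without losing data.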

How Data Science Services Companies Are Redefining Analytics

The integration of data pipelines, data lakehouse platforms, and cloud data systems is transforming how data analysis works. Data science services companies build on this integration to provide data analytics services that deliver timely insights from complex datasets. Modern data engineering teams use data catalogues, data migration, and data modelling techniques to integrate data seamlessly across multiple platforms.
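At its core, a data catalogue is a searchable index of dataset metadata. The toy Python sketch below uses a hypothetical `DataCatalog` class whose entries record an owner, a description, tags, and a schema. Real catalogue products also track lineage, access policies, and usage, and this sketch omits all of that.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    owner: str
    description: str
    tags: set = field(default_factory=set)
    schema: dict = field(default_factory=dict)  # column name -> type name


class DataCatalog:
    """A toy in-memory catalogue of dataset metadata (hypothetical design)."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        # Dataset names act as unique identifiers in this sketch.
        if entry.name in self._entries:
            raise ValueError(f"dataset {entry.name!r} already registered")
        self._entries[entry.name] = entry

    def get(self, name):
        return self._entries[name]

    def search(self, keyword=None, tag=None):
        """Return sorted dataset names matching a keyword and/or a tag.

        The keyword is matched case-insensitively against name and description.
        """
        results = []
        for entry in self._entries.values():
            if tag is not None and tag not in entry.tags:
                continue
            if keyword is not None:
                text = f"{entry.name} {entry.description}".lower()
                if keyword.lower() not in text:
                    continue
            results.append(entry.name)
        return sorted(results)


if __name__ == "__main__":
    catalog = DataCatalog()
    catalog.register(DatasetEntry(
        "sales.orders", "finance", "Cleansed customer orders",
        {"finance", "pii"}, {"order_id": "TEXT", "amount": "REAL"},
    ))
    catalog.register(DatasetEntry(
        "web.sessions", "marketing", "Clickstream sessions by visitor", {"marketing"},
    ))
    print(catalog.search(tag="finance"))
    print(catalog.search(keyword="sessions"))
```

Tags such as `pii` show how the same metadata can support discovery and governance at once. An analyst finds datasets by topic, and a compliance team can list every dataset marked as sensitive.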

Innovative companies enhance this process further with data versioning and version control tools. These tools track changes to datasets and keep them reliable enough to support forecasting of future trends. Together, these practices help data analysts deliver timely insights and strengthen distributed computing and big data analytics initiatives.
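One common approach to dataset versioning is content addressing. You hash a canonical serialisation of the data, so identical content always produces the same version ID. The sketch below illustrates the idea in memory. The `DatasetVersionStore` class and its methods are hypothetical and do not represent any tool's API. Dedicated data version control tools add durable storage and integrate with existing workflows.

```python
import hashlib
import json


class DatasetVersionStore:
    """Minimal content-addressed version history for a dataset (illustrative only)."""

    def __init__(self):
        self._snapshots = {}  # version id -> list of records
        self.history = []     # version ids in commit order

    @staticmethod
    def _fingerprint(records):
        # Canonical JSON (sorted keys) so identical content yields an identical hash.
        # Record order still matters: reordering rows produces a new version.
        payload = json.dumps(records, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]

    def commit(self, records):
        version = self._fingerprint(records)
        if self.history and self.history[-1] == version:
            return version  # nothing changed since the last commit
        # Round-trip through JSON to store an independent deep copy.
        self._snapshots[version] = json.loads(json.dumps(records))
        self.history.append(version)
        return version

    def checkout(self, version):
        return json.loads(json.dumps(self._snapshots[version]))

    def diff(self, old, new, key):
        """Report record keys added, removed, or changed between two versions."""
        a = {r[key]: r for r in self._snapshots[old]}
        b = {r[key]: r for r in self._snapshots[new]}
        return {
            "added": sorted(b.keys() - a.keys()),
            "removed": sorted(a.keys() - b.keys()),
            "changed": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
        }


if __name__ == "__main__":
    store = DatasetVersionStore()
    v1 = store.commit([{"id": 1, "price": 10}, {"id": 2, "price": 20}])
    v2 = store.commit([{"id": 1, "price": 12}, {"id": 3, "price": 30}])
    print(store.diff(v1, v2, key="id"))
```

Because version IDs come from content, re-committing unchanged data is a no-op. Any earlier snapshot can be checked out to reproduce a past analysis exactly.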

Data Engineering: Building the Core of Modern Enterprises

Data engineering forms the foundation of enterprise digital transformation. By designing a scalable data lake architecture and implementing data plumbing (the pipelines that move data between systems) and data lake services, businesses can manage large data sources effectively. Its main functions include:

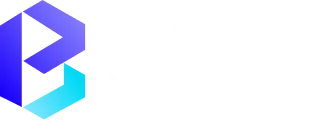

- Data pipelines and ETL (extract, transform, load): These processes quickly source data from a variety of raw sources, transform it, and load it into target systems.

- Cloud storage and data warehouse tiers: These cloud-based storage layers hold structured data (databases, spreadsheets) and, typically through object storage or data lakes, unstructured data (images, videos, emails). They provide secure, scalable storage and easy access for analysis, supporting real-time insights and business intelligence.

- Data governance and compliance: Data governance and data security policies build trust in how data is used and keep it protected.
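The extract, transform, and load stages described above can be sketched end to end with Python's standard library. This is a minimal illustration under assumed inputs. The inline CSV, the `sales` table, and the cleaning rules are hypothetical, and an in-memory SQLite database stands in for a cloud data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw source data, including one malformed row.
RAW_CSV = """region,amount
north, 120.50
south,80
north,not-a-number
east, 45.25
"""


def extract(text):
    """Extract: parse raw CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and lowercase regions, cast amounts, drop unparseable rows."""
    clean = []
    for row in rows:
        try:
            clean.append((row["region"].strip().lower(), float(row["amount"])))
        except (AttributeError, TypeError, ValueError):
            continue  # a real pipeline would route this row to a quarantine table
    return clean


def load(conn, rows):
    """Load: write cleaned rows into a warehouse-style table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(RAW_CSV)))
    for region, total in conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ):
        print(region, total)
```

Keeping each stage as a separate function means any stage can be tested or swapped on its own. For example, you could replace the SQLite `load` with a warehouse client without touching `extract` or `transform`.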

Data engineers play a key role in this system. They support data analysts, data scientists, and data science teams by maintaining a strong technical data foundation.

Tools and Platforms Shaping Modern Data Engineering

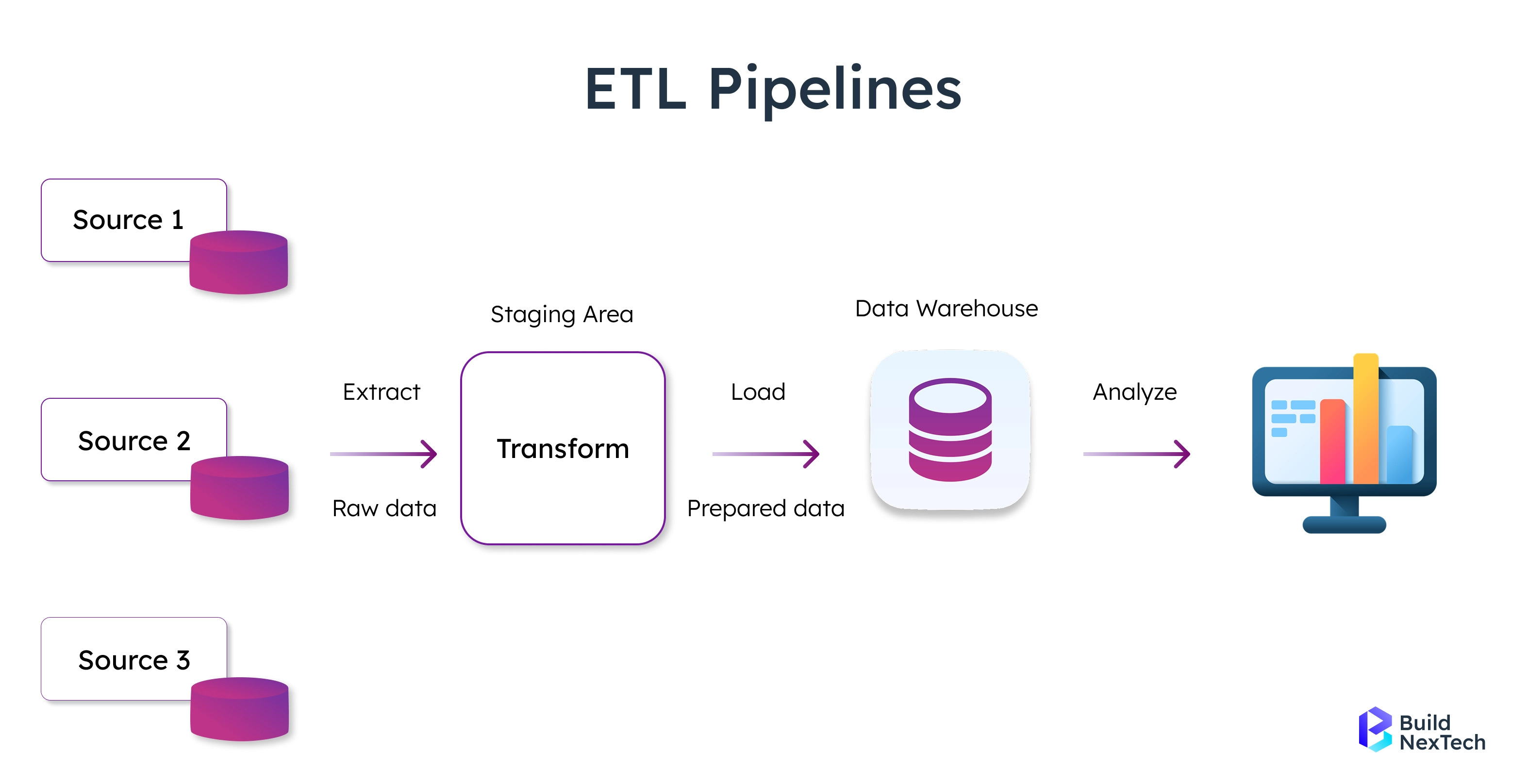

Modern data engineers focus on mastering platforms like Snowflake, Hevo Data, Apache Spark, Apache Airflow, dbt, Apache Flink, Kafka Streams, and other cloud-native solutions. These tools help:

- Track data versions and manage changes effectively.

- Fix data integration problems and ensure smooth ETL processes.

- Organize data catalogues for easier discovery and collaboration.

- Maintain high data quality through validation, profiling, and governance tools like Great Expectations.

- Speed up data analysis with efficient batch and stream processing frameworks.
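Stream processing frameworks such as Apache Flink and Kafka Streams commonly aggregate events over time windows. The sketch below shows the idea behind a tumbling window count (fixed, non-overlapping windows) in plain Python. It assumes events arrive as `(timestamp_seconds, key)` pairs in timestamp order. Real engines also handle out-of-order and late events, which this sketch ignores.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs, assumed to be
    in timestamp order. Each window is emitted as soon as an event from a later
    window arrives, mimicking how a streaming job closes windows incrementally.
    Windows that receive no events are not emitted.
    """
    current_window = None
    counts = defaultdict(int)
    for ts, key in events:
        window_start = int(ts // window_seconds) * window_seconds
        if current_window is not None and window_start != current_window:
            yield current_window, dict(counts)  # close the previous window
            counts.clear()
        current_window = window_start
        counts[key] += 1
    if current_window is not None:
        yield current_window, dict(counts)  # flush the final open window


if __name__ == "__main__":
    events = [(0, "click"), (3, "view"), (7, "click"), (12, "click"), (14, "view"), (31, "click")]
    for start, window_counts in tumbling_window_counts(events, window_seconds=10):
        print(start, window_counts)
```

Because the function is a generator, results appear while the input is still being consumed. This is the key difference from a batch job that waits for all the data before producing any output.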

Architectures and platforms such as data mesh, cloud data systems, data lakes, and lakehouses offer flexibility and scalability, making data pipelines stronger and more efficient. Modern companies use data visualization services to turn processed data into interactive dashboards for faster decision-making.

Future Trends: Generative AI and Advanced Analytics Pipelines

Generative AI and advanced analytics pipelines are shaping the future. Enterprises are adopting scalable data lake architectures, data mesh architectures, and cloud data stacks to support distributed computing and AI workloads. BuildNexTech's financial dashboard case study shows one practical example of integrating cloud data stacks with real-time analytics. Data sharing frameworks, data catalogues, and strong data security rules are equally important, because they maintain trust and compliance.

Top Data Engineering Companies Driving Innovation in 2026

In 2026, data engineering companies are leading innovation, helping organisations turn raw data into actionable insights for smarter decisions. Growing demand for real-time analytics, scalable cloud solutions, and AI systems makes strong data management critical. Top organisations rely on modern cloud data services to store, process, and scale data efficiently, using disruptive technologies to transform information into business value.

Key Data Management Solutions:

- Advanced Data Pipelines: Real-time and ETL pipelines for seamless data aggregation and analysis.

- Cloud Data Stacks: Scalable infrastructure for diverse data types.

- Data Mesh Architectures: Decentralized models for governance and integration.

- AI-Enabled Analytics Platforms: Machine learning-powered predictive and prescriptive insights.

- Data Quality Management (DQM): Tools and data compliance services to ensure accuracy, security, and regulatory adherence.

Global leaders reinventing the data ecosystem:

- Accenture: Utilizes AI, advanced data pipelines, cloud databases, and visualizations. Transforms complex datasets into actionable business intelligence. Enables real-time and historical data processing for quick decision-making.

- LTIMindtree: Industry leader in cloud infrastructure and data integration. Streamlines large-scale data operations and hybrid architectures. Ensures high-quality, consolidated data management for enterprises.

- ScienceSoft: Specializes in big data engineering and foundational technologies. Builds scalable ETL pipelines and secure cloud platforms. Helps organizations fully leverage their data assets.

- Simform: Delivers end-to-end ETL pipeline and real-time data streaming solutions. Ensures continuous, reliable data flow for AI and analytics. Drives predictive insights and operational efficiency.

- XenonStack: Excels in data mesh architectures and scalable cloud stacks. Enables decentralized governance with strong compliance. Supports agile and collaborative enterprise data environments.

- BNXT: Emerging leader in cloud-native data pipelines and real-time analytics. Combines scalability, security, and AI-driven insights. Empowers businesses to turn raw data into actionable intelligence.

What Sets the Top Data Engineering Companies Apart

Successful companies differentiate themselves by doing the following:

- Skilled Data Engineering Teams: Expertise in data pipelines, data integration, and data quality.

- Cloud & Big Data Solutions: Knowledge of cloud computing, big data platforms, and data warehouses.

- Data Governance & Compliance: Enforcement of data governance policies and data security compliance.

- Incorporating AI and Analytics: Support for machine learning, predictive analytics, and data products.

Choosing a Data Engineering Services Company: Expert Tips for 2026

Suggestions for finding the right organisation:

- Technical Ability: Must be proficient in data pipelines, cloud data infrastructure, ETL processes, and data integration.

- Experience and Case Studies: Review their data engineering team and past projects to assess expertise.

- Scalable Solutions: Should handle big data engineering, data lakes, and data warehouses efficiently.

- Security and Compliance: Ensure adherence to data security policies and data governance requirements.

- Integration Capabilities: Ability to integrate multiple data sources seamlessly across the organization.

Why Working with the Right Data Engineering Partner is Important

The right partner helps data engineers work efficiently with internal teams while maintaining high-quality data pipelines. It provides strategic guidance on data management, analytics, ETL processes, and scalable cloud data architectures. The result is reliable, actionable data for faster business decisions.

The AI Foundation: Data Engineering’s Pivotal Role in 2026

Advanced AI data analytics companies rely on strong data pipelines, data integration services, and cutting-edge AI development services, including generative AI development and AI software development. Intelligent data engineering preprocesses datasets and ensures that machine learning and AI models receive high-quality data. Data engineers and data scientists work together to turn raw data into useful insights, supporting cloud-based data management projects across the company.

The Future of Data Engineering in 2026 and Beyond

Emerging trends transforming the data landscape include:

- Hybrid cloud data services offer scalable, flexible solutions that integrate private and public cloud infrastructure.

- Generative AI models and AI-driven pipelines deliver more powerful predictive forecasts and richer insights.

- Data pipelines and automated ETL processes streamline data extraction, transformation, and loading.

- Scalable data lakes and cloud infrastructure can handle large volumes of diverse data.

- Effective data governance and data security practices are essential for ensuring data protection, accuracy, and compliance across enterprises.

Organisations that embrace these innovations will be well placed to lead, because intelligent data engineering lets them make decisions faster, more accurately, and more strategically.
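Automated pipelines like these are usually run by an orchestrator such as Apache Airflow, which models a pipeline as a directed acyclic graph (DAG) of tasks. The Python sketch below reproduces only the dependency-ordering idea, using the standard library's `graphlib` module (Python 3.9+). It is not Airflow's API, and the task names in the usage example are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Execute tasks in an order that respects their upstream dependencies.

    tasks: mapping of task name -> zero-argument callable.
    dependencies: mapping of task name -> set of upstream task names.
    Returns the order in which tasks ran. graphlib raises CycleError if the
    dependencies contain a cycle, since such a pipeline could never finish.
    """
    known = set(tasks)
    unknown = {up for ups in dependencies.values() for up in ups} - known
    if unknown:
        raise ValueError(f"unknown upstream tasks: {sorted(unknown)}")
    # Every task becomes a node, including tasks with no dependencies.
    graph = {name: set(dependencies.get(name, ())) for name in tasks}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        tasks[name]()  # a real orchestrator would add retries, logging, and scheduling
    return order


if __name__ == "__main__":
    log = []
    names = ["extract", "transform_orders", "transform_customers", "load"]
    tasks = {name: (lambda n=name: log.append(n)) for name in names}
    dependencies = {
        "transform_orders": {"extract"},
        "transform_customers": {"extract"},
        "load": {"transform_orders", "transform_customers"},
    }
    print(run_pipeline(tasks, dependencies))
```

The two transform tasks have no dependency on each other, so their relative order is unspecified here. A real orchestrator could run them in parallel.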

Conclusion

Data engineering services are the backbone of modern enterprises, enabling organizations to turn raw, complex data into actionable insights. Throughout this blog, we explored how advanced data pipelines, cloud data stacks, data mesh architectures, and AI-enabled analytics platforms are driving innovation in 2026. We highlighted the role of top data engineering companies—from Accenture and LTIMindtree to ScienceSoft, Simform, XenonStack, and BNXT—in redefining data management with scalable, secure, and high-performing solutions.

Choosing the right data engineering partner is crucial for building robust data pipelines, ensuring data quality, maintaining compliance, and integrating diverse data sources. These partners empower enterprises to leverage real-time analytics, optimize cloud infrastructure, and support AI-driven decision-making. By adopting modern data engineering strategies and collaborating with industry leaders, organizations can stay ahead in the era of big data, cloud computing, and AI, unlocking growth, efficiency, and competitive advantage.

People Also Ask

What differentiates data engineering from data science?

Data engineering focuses on building and maintaining data flows and the underlying data infrastructure, while data science focuses on using that data to generate insights.

What is the cost of data engineering for organisations?

Costs vary widely depending on the scope of work, the tech stack, and the size and calibre of the company.

Which industries are leading the investment in data engineering in 2026, and why are they focusing on enhancing their data infrastructure?

The primary investing industries are healthcare, financial services and retail.

How can small and medium-sized businesses leverage the expertise and solutions of large data engineering companies to access advanced analytics and scale efficiently?

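By using the scalable services that large data engineering companies offer, small and medium-sized businesses can access advanced analytics without building all of the underlying infrastructure in-house.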
