In 2026, Data Engineering has become the backbone of global innovation. Businesses across every industry rely on intelligent data pipelines, scalable Data Infrastructure, secure cloud platforms, and AI-powered analytics platforms to extract value from information and accelerate digital transformation. As Machine Learning, Generative AI, large language models (LLMs), AI models, and artificial intelligence ecosystems mature, the ability to integrate real-time data, automate ETL/ELT pipelines, build modern data warehouses, and manage complex data lakes has become a key differentiator.

Yet building elite internal data teams is costly and complex. Global talent shortages, evolving software engineering practices, rising cybersecurity expectations, and compliance requirements such as GDPR and HIPAA, together with contractual safeguards like NDAs and privacy techniques like differential privacy, demand a new approach. As a result, enterprises increasingly choose data outsourcing services and IT Outsourcing partners who provide end-to-end execution capabilities, custom software, software development outsourcing, QA testing, UI/UX design, mobile applications, and scalable analytics solutions backed by infrastructure specialists and certified DevOps and MLOps teams.

Strategic leaders now treat outsourced expertise not as cost-cutting, but as a catalyst for AI development, customer service automation, workflow automation, real-time analytics, predictive analytics, fraud detection, sustainability, net-zero initiatives, and Data Security programs. Top AI outsourcing firms offer consulting, data integration, data governance, data quality frameworks, advanced observability tooling, end-to-end column-level lineage, and ongoing platform maintenance to ensure trust and transparency.

The Importance of Data Engineering in 2026

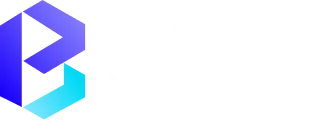

Modern data infrastructure enables data scientists, ML engineers, and AI teams to operationalise massive Big Data volumes using ETL pipelines, streaming log data, Apache Kafka, and event-driven architectures. Without reliable systems for managing real-time data pipelines, pipeline orchestration, and data lake architectures, companies cannot power chatbots, virtual assistants, AI copilot tools, AI-augmented pipelines, or enterprise software solutions.

High-performing organisations invest heavily in data governance, secure cloud solutions, and modern data stack frameworks built on Snowflake, Databricks, Redshift, Postgres, and hybrid cloud environments. They implement modern data architecture cloud systems and Cloud Data Engineering workflows to unlock scalability and automation at every layer of the Data Platform. They also leverage cloud-centred data engineering and cloud data pipelines to scale efficiently.

Enterprises with resilient data foundations enable fast decision-making, strategic customer acquisition, intelligent content generation, and generative content workflows across sales, product, marketing, and customer service operations. Their Data Engineering Services teams support AI-native tools, AI-driven tools, AI software, and AI-augmented experiences from Computer Vision pipelines to NLP solutions for call centers and chatbots.

Understanding Data Engineering

At its core, Data Engineering covers designing and maintaining data pipelines, data warehouses, and data lakes, together with multi-layer data governance. In outsourced engagements it often extends to Information Technology Outsourcing (IT Outsourcing) architectures, colocation, secure Data Centre operations (including advanced cooling), and data entry flows.

It ensures data is usable across operational systems, cloud data platforms, analytics platforms, and blockchain intelligence data platform environments for fraud analytics, financial oversight, and Blockchain data security.

Data engineers build scalable data integration systems using modern storage (data lakes, data warehouses) and compute platforms (Databricks, Snowflake, Redshift) to enable AI development, LLM fine-tuning, AI/ML development, MLOps lifecycle orchestration, and data engineering in cloud environments in a secure and governed way.

The Shift Towards Outsourcing Data Engineering Tasks

Top organisations outsource to specialised data engineering service providers who build infrastructure, deliver data pipeline projects, and take over data management functions as part of a global Cloud Data Engineering strategy. These partners operate as an external data engineering layer for the enterprise.

They unlock:

- Faster time to market

- Access to cloud-centred data engineering skills

- Full-cycle software engineering + custom software delivery

- Client satisfaction through predictable execution

- Access to engineering talent across the United States, EU, and APAC, including Y Combinator-backed teams

- Strong IT consulting support

Emerging partners like SuperStaff and next-generation platforms delivering data annotation engine pipelines and AI-augmented pipelines fuel automation at scale.

Impact on Big Data & Analytics

Best-in-class teams handle large data volumes, build massive data lakes, and operate cloud data platforms powering real-time analytics, predictive analytics, Generative AI, AI-driven tools, and enterprise AI + Robotics innovation.

They optimize ETL/ELT processes, streaming systems (Apache Kafka), and edge computing, enabling sustainable operations, smart energy grids for renewable energy, and deep-learning-driven Computer Vision systems in healthcare imaging and robotics. These efforts reflect the maturity in big data development that underpins big data engineering services.
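To make the ETL/ELT distinction concrete, here is a minimal sketch of the ELT pattern: raw rows are loaded untouched and the transformation runs afterwards, inside the database. It uses Python's built-in `sqlite3` as a stand-in for a cloud warehouse. The table names, the sample rows, and the `run_elt` helper are assumptions made for illustration, not part of any specific platform.

```python
import sqlite3

# Hypothetical raw order events, as they might arrive from a source system.
RAW_ORDERS = [
    ("o-1", "eu", "19.99"),
    ("o-2", "us", "5.00"),
    ("o-3", "eu", "not-a-number"),  # malformed amount
    ("o-4", "us", "12.50"),
]


def run_elt(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Load raw rows untouched, then transform them inside the database."""
    # Load: land the data as-is, keeping every column as text.
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ORDERS)

    # Transform: cast and aggregate with SQL. The GLOB pattern is a crude
    # "looks like a decimal" filter that drops the malformed row.
    conn.execute(
        """
        CREATE TABLE revenue_by_region AS
        SELECT region, ROUND(SUM(CAST(amount AS REAL)), 2) AS revenue
        FROM raw_orders
        WHERE amount GLOB '[0-9]*.[0-9]*'
        GROUP BY region
        """
    )
    return conn.execute(
        "SELECT region, revenue FROM revenue_by_region ORDER BY region"
    ).fetchall()
```

Calling `run_elt(sqlite3.connect(":memory:"))` returns one revenue row per region. Because the raw table is kept intact, the transformation can be rerun or revised later without re-extracting from the source, which is the main practical argument for ELT over ETL.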

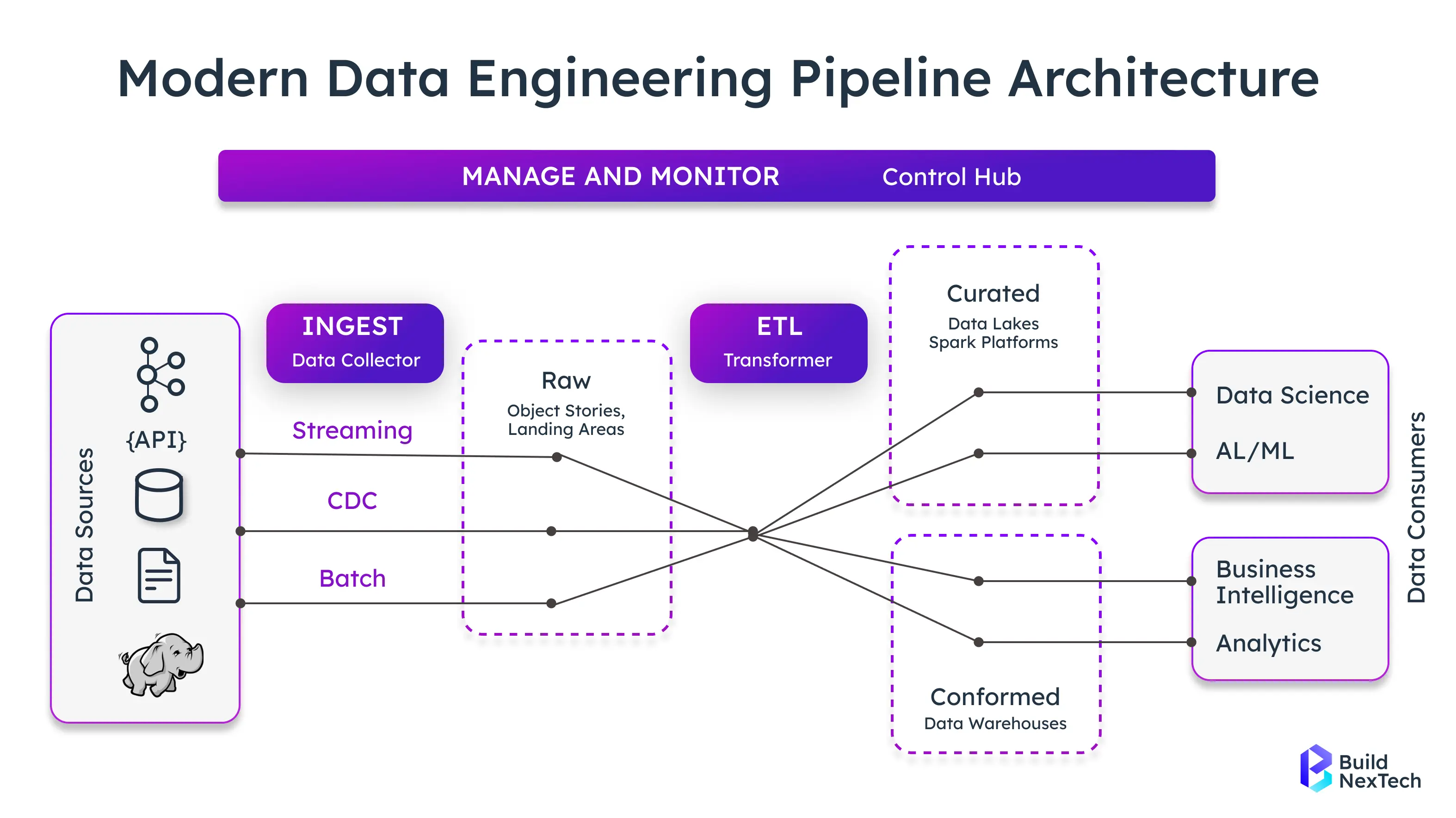

Key Players in the Data Engineering Outsourcing Market

The 2026 outsourcing landscape is mature, featuring industry giants and niche innovators in Data Engineering Services. These firms deliver end-to-end data engineering services, data engineering consulting, and scalable data engineering services, acting as strategic co-creation partners rather than generic offshore vendors.

Leading Data Engineering Providers

Top data engineering providers in 2026 deliver scalable data engineering services, secure cloud environments, real-time intelligence, and AI models + copilots integrated into enterprise pipelines. They provide end-to-end data engineering, full-cycle development, UI/UX, QA testing, and mobile app development, while ensuring strong NDAs, GDPR/HIPAA compliance, and infrastructure and colocation expertise. These firms leverage GPT-4 Turbo, DALL-E 3, Sora, and cloud-native data engineering services to power automated pipelines, generative content, multimodal systems, and self-optimising enterprise platforms.

Notable Data Engineering Leaders in 2026:

Accenture – enterprise data platforms & secure cloud delivery

Databricks – lakehouse & MLOps/LLM pipelines

InData Labs – AI-driven data engineering services

EPAM – automation & full-cycle development

SoftServe – multi-cloud data engineering & observability

BuildNexTech – cloud-native data engineering, AI copilots, QA, UI/UX

Slalom – real-time analytics & enterprise cloud migration

Primary Solutions & Capabilities Offered:

- Scalable data engineering services

- Secure cloud environments

- Real-time intelligence

- AI models + AI copilot assistants

- End-to-end data engineering services

- Full-cycle development and UI/UX design

- QA testing, recruiting, and mobile applications

- Strong NDAs, compliance (GDPR, HIPAA)

- Infrastructure specialists & colocation expertise

Emerging Companies to Watch

A new wave of agile providers, including several Y Combinator–backed AI outsourcing companies, is transforming modern Data Outsourcing Services. These firms specialise in AI-augmented pipelines, AI-driven tools, intelligent virtual assistants, automated data quality workflows, and LLM fine-tuning designed to accelerate enterprise AI deployment and data engineering in cloud environments.

Focused on automation-first and streaming-first design, they bring advanced data observability platform capabilities, real-time data pipelines, and scalable architectures that support high-growth sectors. Their solutions power property investors, high-velocity rental GMV platforms, and secure blockchain intelligence data platform use cases, making them strategic partners for companies seeking innovative, cloud-native, and cost-efficient data solutions.

Industry-Specific Data Engineering Solutions

Emerging companies are reshaping industry data workflows with AI-augmented pipelines, AI-driven tools, and LLM fine-tuning tailored to mission-critical sectors.

Modern outsourced engineering partners build AI-enhanced data observability platform tooling that supports:

- Property intelligence & PropTech (rental valuation, market intelligence, rental GMV prediction)

- Blockchain intelligence data platform ecosystems (fraud insights, on-chain analytics, AML/KYC monitoring)

- Real-time operational dashboards for supply chain & fintech

- Knowledge automation & virtual assistants for enterprise workflows

Many disruptive startups, including Y Combinator-backed AI companies, are accelerating innovation by blending traditional data engineering with scalable foundation model integration and smart agent workflows.

Cloud Data Engineering Trends

Cloud Data Engineering Trends are reshaping how enterprises build and scale intelligence systems. Organisations are moving toward cloud-centred architectures to support real-time analytics, AI workloads, and global data access, with platforms like Snowflake, Databricks, BigQuery, Redshift, and PostgreSQL driving modern adoption. The shift includes serverless compute, containerized MLOps, and lakehouse + data mesh models, ensuring flexible, scalable, and cost-optimised data delivery. With zero-trust security, automated governance, FinOps-aligned optimisation, and end-to-end observability, cloud-native pipelines now power mission-critical operations. As AI-augmented pipelines and autonomous orchestration mature, cloud data engineering becomes the backbone for self-healing systems, automated quality checks, and intelligent workload distribution, setting the stage for resilient, future-ready data platforms.

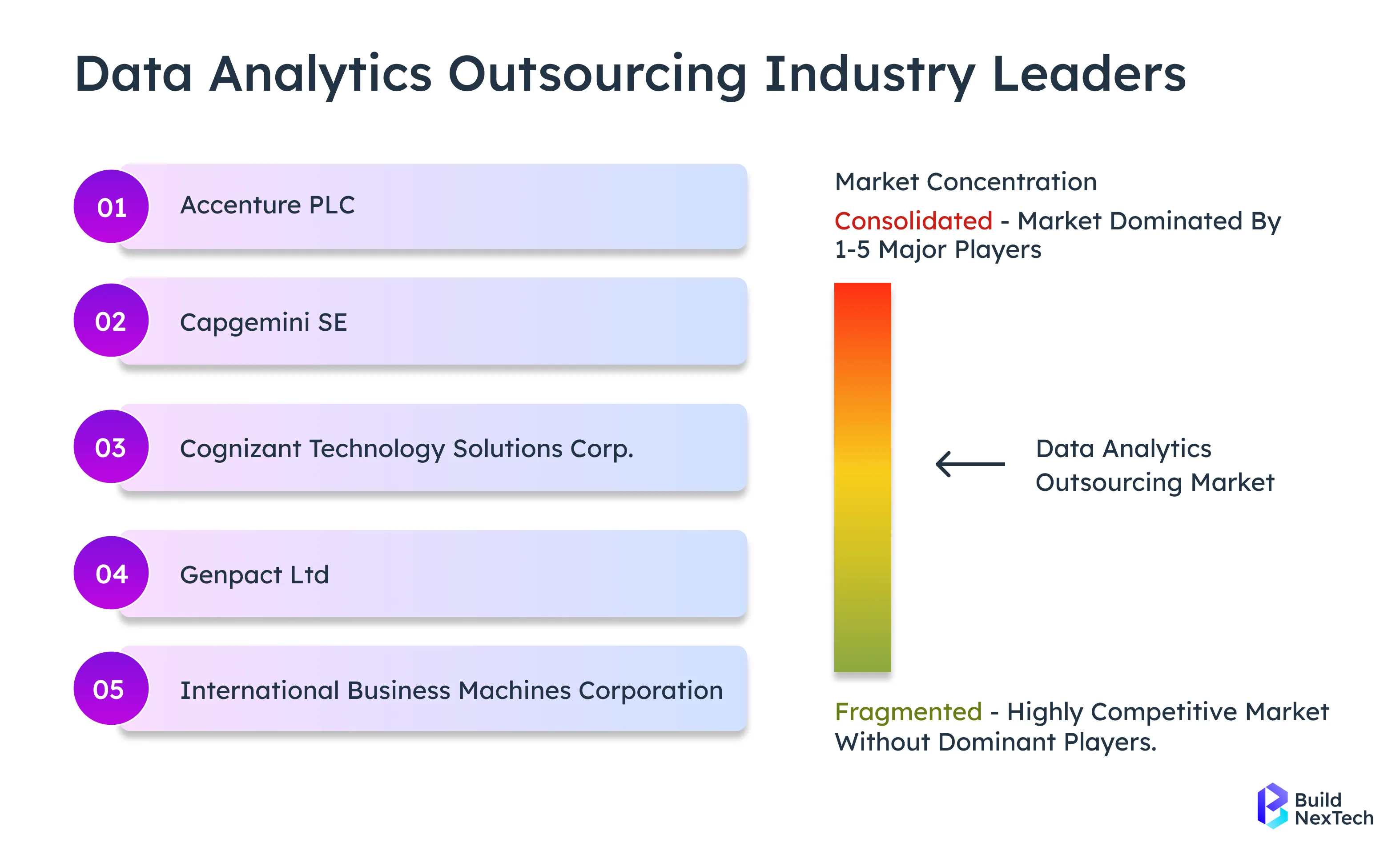

Top Data Engineering Trends 2026

The latest trends in data engineering for 2026 underline the increasing importance of scalable, agile, and innovative data management strategies. Understanding and embracing these trends will be crucial for organizations aiming to stay competitive in an increasingly data-centric business landscape.

The Rise of Cloud-Centered Data Engineering

The shift to cloud-centered data engineering continues to accelerate in 2026.

Modern delivery models prioritize:

- Serverless compute for auto-scaling workloads

- Containerized MLOps and Kubernetes-first engineering

- Lakehouse + mesh data platform patterns replacing legacy systems

- Multi-cloud security, cybersecurity, and zero-trust frameworks

Cloud data engineering firms specialize in data engineering in cloud environments at scale, enabling resilient digital infrastructure, hybrid deployments, colocation strategies, and data center modernization.

Platforms such as Databricks, Snowflake, Redshift, and Postgres anchor enterprise-grade pipelines, powering global analytics and real-time AI systems.

Modern Data Architecture in the Cloud

Modern companies architect platforms designed for:

- Automated data discovery & governance

- Column-level lineage for audit & compliance

- Unified data catalogs & observability

- High-availability streaming (Kafka, Flink, Spark Streaming)

- End-to-end MLOps integration

These cloud-native data engineering services ensure consistency across ingestion, transformation, monitoring, and delivery layers — aligned with enterprise SLAs and regulatory frameworks.
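Column-level lineage, listed above as an audit and compliance capability, can be pictured as a graph in which each derived column points to the columns it was computed from. The sketch below walks that graph back to its root sources. The column names, the `LINEAGE` mapping, and the `upstream_sources` helper are hypothetical; production catalogs typically derive such edges from query parsing rather than a hand-written dictionary.

```python
from collections import deque

# Hypothetical lineage edges: each derived column maps to the columns it reads from.
LINEAGE = {
    "reports.revenue_by_region.revenue": [
        "staging.orders.amount",
        "staging.orders.currency_rate",
    ],
    "reports.revenue_by_region.region": ["staging.orders.region"],
    "staging.orders.amount": ["raw.orders.amount"],
    "staging.orders.currency_rate": ["raw.fx_rates.rate"],
    "staging.orders.region": ["raw.orders.region"],
}


def upstream_sources(column: str, lineage: dict[str, list[str]]) -> set[str]:
    """Return the root source columns that feed `column`, following edges breadth-first."""
    roots: set[str] = set()
    seen = {column}
    queue = deque([column])
    while queue:
        current = queue.popleft()
        parents = lineage.get(current, [])
        if not parents and current != column:
            roots.add(current)  # no recorded parents, so treat it as a source column
        for parent in parents:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return roots
```

For example, `upstream_sources("reports.revenue_by_region.revenue", LINEAGE)` traces the report column back to the raw order amount and the raw FX rate, which is the kind of answer an auditor asks for when a reported figure is questioned.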

Challenges and Solutions in Cloud Data Engineering

Innovations Driving the Future of Data Engineering

Innovation in data engineering is accelerating as enterprises adopt AI-augmented pipelines, autonomous orchestration, and real-time analytics frameworks to handle scale and complexity. The future centers on automated data quality, intelligent pipeline monitoring, semantic data discovery, and foundation-model–powered engineering assistants that streamline development and operations. Modern architectures blend lakehouse, data mesh, and edge-to-cloud computing, ensuring fast, secure, and compliant data flow across global systems. With MLOps maturity, FinOps efficiency, and zero-trust governance, platforms evolve into self-optimising, self-healing, and compliance-aware systems capable of powering real-time decisioning. As AI-native engineering becomes standard, organisations gain the ability to unlock predictive intelligence, accelerate digital transformation, and drive data-driven innovation at enterprise scale.

Automation and Pipeline Orchestration

Automation & pipeline orchestration now sit at the core of sustainable data strategy.

Leading firms deliver:

- End-to-end automated data pipelines

- DevOps + MLOps integration

- Observability platform tooling

- Autonomous monitoring & self-healing systems

- Automated compliance workflows (HIPAA, GDPR, SOC2, PCI)

This enables scalable, error-free end-to-end data engineering services while supporting real-time intelligence and governance.
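At its simplest, pipeline orchestration means running tasks in dependency order and recovering from transient failures. The following sketch shows that idea with Python's standard `graphlib` module and a basic retry loop. The `run_pipeline` function and its parameters are assumptions for illustration; dedicated orchestrators add scheduling, backoff, alerting, and state tracking on top of this core.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(
    tasks: dict[str, Callable[[], None]],
    depends_on: dict[str, set[str]],
    max_attempts: int = 3,
) -> list[str]:
    """Run tasks in dependency order, retrying each failed task up to max_attempts times."""
    # Include every task in the graph, even those with no dependencies.
    graph = {name: depends_on.get(name, set()) for name in tasks}
    completed: list[str] = []
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(1, max_attempts + 1):
            try:
                tasks[name]()
                break  # task succeeded, move on to the next one
            except Exception:
                if attempt == max_attempts:
                    raise  # give up and surface the error to the caller
        completed.append(name)
    return completed
```

Given tasks named `extract`, `load`, and `transform`, with `load` depending on `extract` and `transform` depending on `load`, the function runs them in that order. A `load` step that fails once with a transient error is simply retried, a very small version of the "self-healing" behaviour described above.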

Scalability in Data Engineering Services

The next wave of scale combines:

- AI outsourcing companies

- AI-augmented pipelines & autonomous agents

- Multimodal AI + robotics

- Streaming analytics powering operational AI

- Distributed computing for global enterprise workloads

Systems are evolving to self-heal, self-optimize, and manage performance dynamically, supporting net-zero targets and sustainable digital operations.

The Future of Big Data Engineering Services

Future-ready enterprises will use:

- Foundation model-driven data assistive intelligence

- Automated data discovery & quality scoring

- Semantic & graph-based data platforms

- Hybrid edge + cloud analytics

- Real-time decisioning at a global scale

Companies that select data engineering partners based on AI-native tooling, digital transformation depth, and live data intelligence capability will lead the market.
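As one illustration of the automated quality scoring listed above, the sketch below computes, for each column, the share of rows whose value is present and passes a validity rule. The `RULES` mapping, the column names, and the `quality_score` helper are hypothetical; real platforms usually track more dimensions, such as freshness and uniqueness.

```python
from typing import Any, Callable

# Hypothetical validity rules: one predicate per column that should be checked.
RULES: dict[str, Callable[[Any], bool]] = {
    "email": lambda v: isinstance(v, str) and "@" in v,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
}


def quality_score(
    rows: list[dict[str, Any]],
    rules: dict[str, Callable[[Any], bool]],
) -> dict[str, float]:
    """Return, per column, the fraction of rows whose value is present and passes its rule."""
    if not rows:
        return {column: 0.0 for column in rules}
    scores: dict[str, float] = {}
    for column, is_valid in rules.items():
        passed = sum(
            1
            for row in rows
            if row.get(column) is not None and is_valid(row[column])
        )
        scores[column] = passed / len(rows)
    return scores
```

A score that falls below an agreed threshold can then block a pipeline run or raise an alert, so that quality problems surface before they reach downstream dashboards or models.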

Conclusion

In 2026, outsourcing evolves from cost efficiency into a strategic innovation lever. The winners will be organisations partnering with elite data engineering consulting firms, AI-driven outsourcing companies, and cloud data engineering experts capable of delivering:

- AI-augmented pipelines

- Future-proof scalability

- Compliance excellence (GDPR, HIPAA, SOC2)

- Enterprise-grade observability & security

- Real-time intelligence & automation

Those who invest in AI-native, cloud-first outsourced data engineering teams will move faster than competitors, executing digital transformation and unlocking intelligence that defines tomorrow’s market leaders.

People Also Ask

What is the primary advantage of outsourcing data engineering for early-stage companies?

Outsourcing gives startups access to top-tier engineers and proven modern data platforms without hiring overhead. This accelerates data readiness, AI adoption, and product delivery with lower risk and predictable cost.

How does outsourced data engineering support AI product development?

Expert teams build high-quality data pipelines that ensure clean, real-time data for training and inference. This foundation speeds up model deployment and improves AI accuracy across enterprise workflows.

Is outsourcing data engineering secure for industries like fintech and healthcare?

It can be, provided the partner follows SOC2, ISO 27001, HIPAA, and GDPR-aligned frameworks for secure delivery. Data encryption, access control, DevSecOps, and audit logs help keep environments compliant.

How long does it typically take to see ROI from outsourced data engineering?

Most organizations start seeing clear value within 3-6 months through automation, data reliability, and faster insights. ROI grows as pipelines scale, cloud waste reduces, and AI-driven analytics unlock operational efficiency.
