In the last ten years, computer vision has established itself as a critical part of artificial intelligence, advancing the fields of image recognition, object detection, reinforcement learning, and Generative AI. The power of computer vision to capture, analyze, and understand visual data is now being broadly used in industry, research labs, and private companies to improve automation, decision-making, and user experience.

A key factor in any computer vision project’s success is selecting the right deep learning framework. Among the many Deep Learning Frameworks, PyTorch and TensorFlow stand out as the most trusted by research engineers, developers, and machine learning professionals for building deep neural networks, convolutional neural networks, recurrent neural networks, and generative models. These frameworks are frequently utilized by professional teams and AI solution providers like Bnxt.ai, which specialize in Python development, AI integration, and computer vision applications. Their expertise helps enterprises build scalable deep learning models using frameworks such as PyTorch and TensorFlow.

This article gives an overview of PyTorch and TensorFlow, with installation examples and practical tips. It compares their performance, walks through typical machine learning pipelines, and covers computer vision applications and how to deploy them in production.

✨ Key Insights from This Article:

🖼️ Overview of computer vision and its growing impact in AI and industry

⚡ Comparison of PyTorch and TensorFlow for research, production, and deployment

💻 How PyTorch features like Dynamic Computation Graphs and CUDA accelerate model training

📱 Advantages of TensorFlow Lite and TensorFlow Serving for mobile and cloud deployment

🚀 Best practices for installing, using, and deploying deep learning frameworks

🎯 Practical tips for choosing the right framework based on project goals and use cases

Understanding Computer Vision

What is Computer Vision?

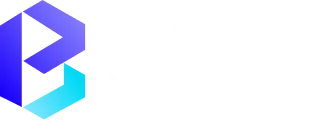

Computer vision is a field of artificial intelligence that allows machines to perceive and interpret visual information from the world. It lets machines recognise and classify objects and extract the important information from images and videos, in a way that loosely mimics human sight. Early computer vision relied mostly on rule-based algorithms and hand-crafted feature extraction. Today, deep learning models, neural networks, and GPU acceleration let machines match or exceed human accuracy on some benchmark tasks in image classification, object tracking, and video analysis.

What is the Process of Computer Vision?

Current computer vision practice relies heavily on deep learning, specifically CNNs and other deep neural networks. The general workflow involves:

- Dataset: ImageNet, CIFAR, and Imagenette are popular datasets for training models. Libraries such as OpenCV and the Keras preprocessing layers help prepare the images for a neural network.

- Neural Network Architecture: Design your own artificial neural network, or build on a pretrained model such as ResNet50, InceptionV3, YOLOv8, Faster R-CNN, or Mask R-CNN for a specific goal.

- Training and Optimisation: Common methods include optimisers such as Adam, data augmentation (for example random flips and crops) to enlarge the effective dataset, Batch Normalisation to stabilise training, and distributed training across GPUs or TPUs to make better use of the hardware.

- Deployment: Integrate models into cloud platforms such as Google Cloud, and serve them in production with tools such as Docker, FastAPI, TorchServe, or TensorFlow Serving.

PyTorch's eager execution and dynamic computational graph make models easier to inspect and debug and quicker to iterate on. TensorFlow 2.x also runs eagerly by default, and it can compile functions into static computational graphs (via tf.function) that are easier to optimise and deploy.
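The training part of this workflow can be sketched in a few lines of PyTorch. This is a minimal illustration, not a recommended architecture: the random tensors stand in for a real dataset batch, the layer sizes and learning rate are arbitrary assumptions, and a fixed horizontal flip stands in for the random augmentations a real pipeline would use.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in for one dataset batch: 8 RGB images of 32x32, 10 classes.
images = torch.rand(8, 3, 32, 32)
labels = torch.randint(0, 10, (8,))

# Simple augmentation: mirror every image left to right (width is dim 3).
augmented = torch.flip(images, dims=[3])

# A tiny CNN: convolution, batch normalisation, pooling, linear classifier.
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.BatchNorm2d(16),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 10),
)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.CrossEntropyLoss()

# One optimisation step: forward pass, loss, backward pass, weight update.
model.train()
optimizer.zero_grad()
logits = model(augmented)
loss = loss_fn(logits, labels)
loss.backward()
optimizer.step()
print(f"loss on this batch: {loss.item():.4f}")
```

In a real project the same loop would iterate over a DataLoader for several epochs and track validation accuracy alongside the loss.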

Most Popular Computer Vision Applications You Should Know

- Healthcare: AI-enabled diagnostics that use object detection models to help identify anomalies in X-rays or MRIs.

- Automotive: Autonomous driving applications rely on image recognition and object detection.

- Retail: Inventory tracking, consumer behaviour analysis, and automated checkouts.

- Security: Facial recognition models and surveillance systems analyse data in real time, supporting situational awareness and real-time monitoring.

According to Fortune Business Insights, the computer vision market is projected to grow into a $17 billion industry, and AI-optimised solutions will become more commonplace in operational work.

An Introduction to PyTorch

What is PyTorch? How It Powers Computer Vision and NLP

PyTorch is an open-source deep learning framework developed by Meta AI, formerly known as Facebook AI Research Lab (FAIR). It is one of the most widely used frameworks in research, popular for its design and for features such as Dynamic Computation Graphs, Eager Execution, and native compatibility with Python. Its key features include:

- Dynamic Computational Graphs: The graph is built on the fly as operations run, so a model's structure can change from one training step to the next.

- AutoGrad: Automatic differentiation that computes the gradients of deep learning models for you.

- GPU Support (CUDA): Fast computation for deep neural networks.

- PyTorch Lightning: A separate, higher-level library built on top of PyTorch for building models and scaling their training across devices.

Several high-level packages are also available: TorchVision for computer vision, TorchText for natural language processing, TorchElastic for distributed training, and TorchServe for deploying to production.
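To make the dynamic graph and AutoGrad features concrete, here is a small sketch. The function name `double_until_large` and the threshold of 10 are made up for illustration. The number of loop iterations depends on the input values at run time, and AutoGrad still differentiates through whichever path was actually taken.

```python
import torch


def double_until_large(x: torch.Tensor) -> torch.Tensor:
    """Keep doubling x until its norm reaches 10, then sum the result."""
    y = x
    # Ordinary Python control flow: the graph is recorded as these ops execute.
    while y.norm() < 10:
        y = y * 2
    return y.sum()


x = torch.tensor([1.0, 2.0], requires_grad=True)
out = double_until_large(x)
out.backward()  # AutoGrad walks back through the three doublings that ran

print(out.item())  # 24.0, because y ended up as 8 * x
print(x.grad)      # tensor([8., 8.])
```

A different input would trigger a different number of doublings and therefore a different gradient, with no change to the code.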

PyTorch can be installed for development with pip or conda, either CPU-only or GPU-accelerated (CUDA). The exact build you need depends on your platform, so check the install selector on pytorch.org if in doubt.

- CPU only install

- pip install torch torchvision torchaudio

- GPU (CUDA) install or PyTorch CUDA (replace cu118 with the tag that matches your CUDA version)

- pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

- PyTorch Lightning provides an easier way to build and train models.

- pip install pytorch-lightning
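After installing, a quick check like the following confirms that PyTorch imports correctly and shows whether it can see a CUDA GPU. It is only a sanity check; the tensor shape is arbitrary.

```python
import torch

# Fall back to the CPU when no CUDA device is visible.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Allocate a small tensor on the chosen device to prove it is usable.
x = torch.rand(2, 3, device=device)

print("PyTorch version:", torch.__version__)
print("Using device:", device)
print("Tensor shape:", tuple(x.shape))
```

If this reports cpu on a machine with an NVIDIA GPU, the installed build or the driver version is the usual place to look.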

PyTorch in Action

PyTorch has powered many state-of-the-art computer vision and AI projects — from YOLOv7 for real-time object detection to Generative Adversarial Networks (GANs) used in Generative AI applications. Major companies like Tesla, Meta (Facebook AI Research), and OpenAI have leveraged PyTorch to build deep learning models for autonomous systems, image synthesis, and large-scale AI experiments. This makes PyTorch a preferred choice for organizations and Python development agencies seeking to build advanced computer vision systems.

With strong community support and thousands of active PyTorch GitHub repositories, developers have access to a rich ecosystem of pretrained models and reusable scripts. For instance, engineers can quickly implement architectures such as ResNet or MobileNet on datasets like MNIST, CIFAR-10, or ImageNet to create image recognition pipelines. Thanks to seamless integrations with Hugging Face, ONNX, and TorchScript, these models can be easily deployed across AWS, Azure, or Google Cloud, ensuring smooth transitions from research to production.
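As one possible illustration of the research-to-production step, the sketch below traces a toy model with TorchScript, serialises it, and reloads it. The model and input size are placeholders, the in-memory buffer stands in for a file on disk, and TorchScript is only one of several export routes (ONNX export is another).

```python
import io

import torch
from torch import nn

# Placeholder model; a real project would load trained weights here.
model = nn.Sequential(nn.Conv2d(3, 4, kernel_size=3), nn.ReLU(), nn.Flatten()).eval()
example = torch.rand(1, 3, 8, 8)

# Record the operations run on the example input as a TorchScript module.
traced = torch.jit.trace(model, example)

# Serialise to an in-memory buffer and load it back.
buffer = io.BytesIO()
torch.jit.save(traced, buffer)
buffer.seek(0)
restored = torch.jit.load(buffer)

# The reloaded module should reproduce the original model's output.
with torch.no_grad():
    same = torch.allclose(model(example), restored(example))
print("outputs match:", same)
```

Tracing records one concrete execution path, so models with data-dependent control flow need extra care before they are exported this way.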

At Bnxt.ai, our AI specialists harness the power of PyTorch to design scalable computer vision solutions — from intelligent image classification systems to Generative AI models that drive real business impact.

An Overview of TensorFlow

What is TensorFlow? How It Powers Machine Learning and Computer Vision

TensorFlow is an open-source deep learning framework created by the Google Brain team. It is well regarded for machine learning in general, computer vision tasks, natural language processing problems, and simple reinforcement learning policy models. TensorFlow 2.x executes eagerly by default and can also compile code into Static Computational Graphs, giving it a flexible interface for both research and production applications.

Some of the key features of TensorFlow are:

- TensorBoard: Visualises model metrics, computational graphs, and performance over time.

- TensorFlow Lite (TFLite): TensorFlow models optimised for mobile and embedded devices.

- TensorFlow Serving: Efficient deployment of models in production situations.

- GPU and TPU Acceleration: Increases the speed of deep learning model training.

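- Keras: A high-level TensorFlow API designed for building neural networks rapidly.

- TensorFlow Hub & Estimators: Pre-trained models and straightforward model building, which can significantly speed up model creation. Note that Estimators are a legacy API that recent TensorFlow releases have moved away from in favour of Keras.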
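The relationship between eager execution and static graphs can be shown with a short sketch. The function `dense_relu` and the tensor shapes are invented for this example. The same Python function runs eagerly when called directly and as a compiled graph when wrapped in tf.function.

```python
import tensorflow as tf


def dense_relu(x, w, b):
    """A single dense layer followed by ReLU."""
    return tf.nn.relu(tf.matmul(x, w) + b)


# Wrapping the function asks TensorFlow to trace it into a static graph.
graph_fn = tf.function(dense_relu)

x = tf.ones((2, 3))
w = tf.ones((3, 4))
b = tf.zeros((4,))

eager_out = dense_relu(x, w, b)  # runs op by op, like ordinary Python
graph_out = graph_fn(x, w, b)    # traced on first call, then run as a graph

print("shape:", tuple(eager_out.shape))
print("results equal:", bool(tf.reduce_all(tf.equal(eager_out, graph_out))))
```

The graph version pays a one-off tracing cost on the first call, which is why it tends to help most for functions that are called many times.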

Installing TensorFlow:

- Standard installation

- pip install tensorflow

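- GPU support (TensorFlow GPU): the separate tensorflow-gpu package is deprecated, and the standard tensorflow package already includes GPU support. On Linux, recent releases can also pull in the matching CUDA libraries through pip:

- pip install tensorflow[and-cuda]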

- For mobile deployment using TensorFlow Lite:

- pip install tflite-runtime
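A short check such as the one below can confirm that the installation works and whether TensorFlow detects a GPU. It is a sanity check only, and the tensor used is arbitrary.

```python
import tensorflow as tf

# An empty list means TensorFlow will run on the CPU.
gpus = tf.config.list_physical_devices("GPU")
print("TensorFlow version:", tf.__version__)
print("GPUs visible:", len(gpus))

# Run a small op; TensorFlow places it on a GPU automatically when one is available.
total = tf.reduce_sum(tf.ones((2, 3)))
print("sum of a 2x3 tensor of ones:", float(total))
```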

TensorFlow in Practice

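TensorFlow has powered many computer vision systems, including MobileNet and EfficientNet models for image recognition and widely used implementations of Mask R-CNN. (YOLOv8, by contrast, is built on PyTorch, although its models can be exported to TensorFlow formats.) Researchers at Google and community collaborators frequently publish TensorFlow-based advances on arXiv and at conferences such as CVPR and NeurIPS.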

With TensorFlow.js, models can also run in JavaScript environments, and Google Cloud integration enables large-scale distributed computing.

PyTorch vs TensorFlow: A Comparative Analysis

Analyzing Performance Metrics in PyTorch and TensorFlow

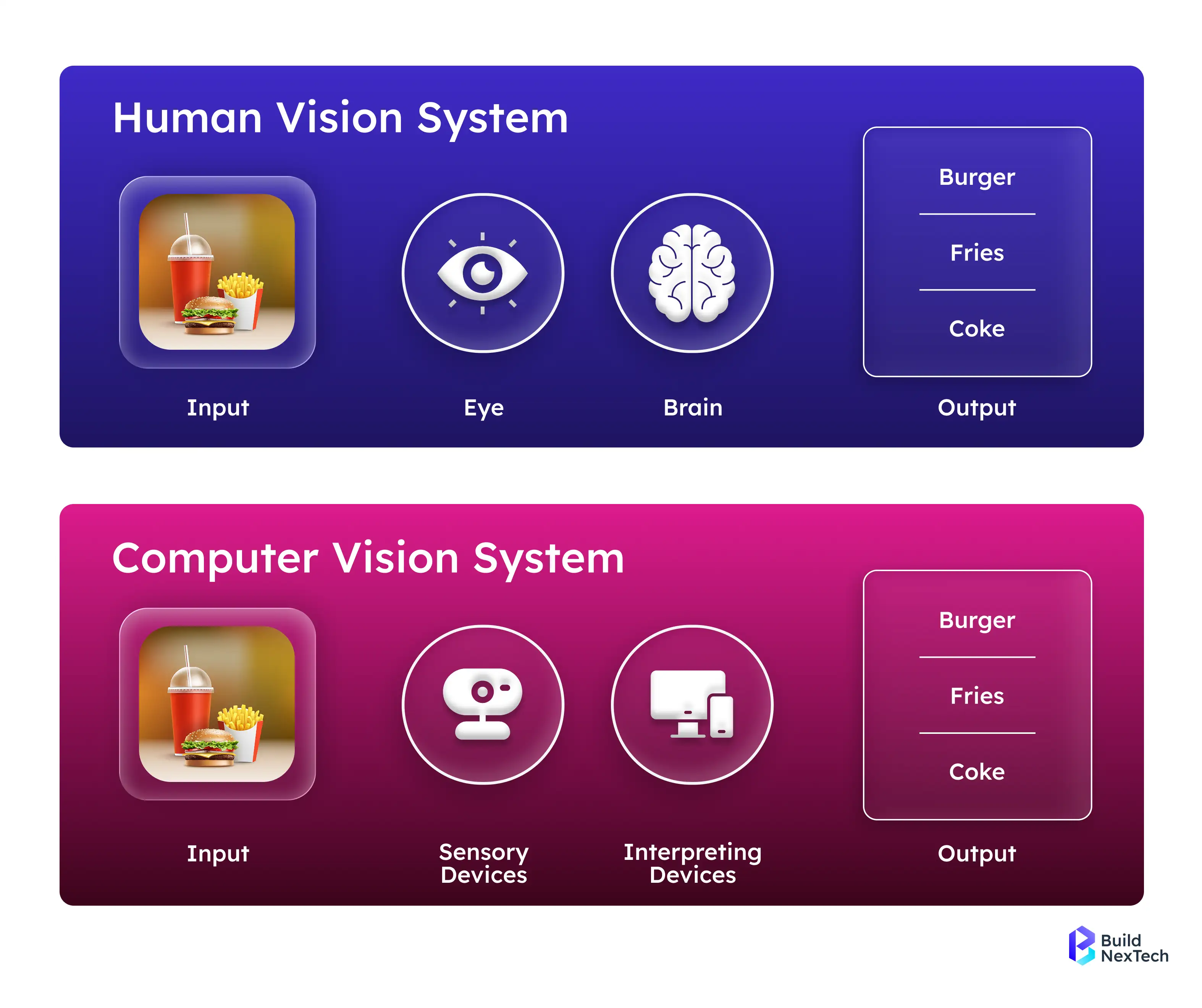

When comparing PyTorch and TensorFlow, performance can vary significantly depending on the hardware configuration, dataset size, and type of deep learning task being executed. Both frameworks are optimized for large-scale AI workloads but excel in different areas: research, experimentation, and production deployment. The main points of comparison are:

- Speed of Training: Both frameworks use GPU acceleration through CUDA. For research-oriented deep learning models, PyTorch 2.0 may deliver shorter iteration times than TensorFlow, although results depend on the model and hardware.

- Computational graphs: TensorFlow can compile models into Static Computational Graphs, which allows optimised deployment with tools like TensorRT. PyTorch builds Dynamic Computational Graphs at run time, which gives more flexibility for complex models.

- Accuracy and Efficiency: Benchmarking studies on datasets like ImageNet and CIFAR-10 show minimal accuracy differences between PyTorch and TensorFlow. PyTorch excels in rapid prototyping and research, while TensorFlow leads in scalability and production-grade deployment.

- Real-world Example: Meta AI uses PyTorch for models like Segment Anything and DINOv2, whereas Google has relied on TensorFlow for TPU-powered systems such as Google Photos and YouTube recommendations.

Studies on common datasets such as ImageNet, CIFAR, and Imagenette agree that the accuracy gap is very small, so the choice usually comes down to workflow, where PyTorch has generally proven more efficient for rapid model prototyping.
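Because results depend so heavily on hardware and workload, it is worth measuring on your own setup rather than relying on published numbers. The helper below is a rough sketch of how time per training step might be measured in PyTorch. The name `mean_step_time`, the toy model, and the step counts are assumptions made for illustration, and an equivalent harness would be needed on the TensorFlow side for a fair comparison.

```python
import time

import torch
from torch import nn


def mean_step_time(model, batch, steps=5, warmup=2):
    """Average seconds per training step, ignoring the first warm-up steps."""
    device = batch.device
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    timings = []
    for i in range(warmup + steps):
        # GPU work is asynchronous, so synchronise before reading the clock.
        if device.type == "cuda":
            torch.cuda.synchronize()
        start = time.perf_counter()
        optimizer.zero_grad()
        model(batch).sum().backward()
        optimizer.step()
        if device.type == "cuda":
            torch.cuda.synchronize()
        if i >= warmup:
            timings.append(time.perf_counter() - start)
    return sum(timings) / len(timings)


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3), nn.ReLU()).to(device)
batch = torch.rand(4, 3, 32, 32, device=device)

print(f"{mean_step_time(model, batch) * 1000:.2f} ms per step on {device}")
```

Warm-up steps matter because the first iterations often include one-off costs such as memory allocation and kernel selection.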

Community Support in both frameworks:

- PyTorch: PyTorch is very approachable for beginners, largely because of its Pythonic syntax, AutoGrad, and wealth of tutorials. Through PyTorch Hub, Hugging Face, and a strong presence in papers at NeurIPS and CVPR, it has also built up considerable community support.

- TensorFlow: TensorFlow tends to have a somewhat steeper learning curve, but it offers TensorBoard, Keras preprocessing layers, and TensorFlow Hub. It also has broad industry adoption and enterprise support options from Google.

Ideal Use Cases

When deciding which framework to use, the right choice depends largely on your project goals, deployment needs, and team expertise.

PyTorch is ideal for:

- Research projects requiring fast experimentation.

- Complex Generative AI models, such as autoencoders or GANs.

- Reinforcement learning and dynamic flexible models.

TensorFlow is preferable for:

- Production - especially models that require scalability.

- Mobile/embedded environments through TensorFlow Lite (TFLite).

- Integration with Google Cloud and TPUs to run large-scale distributed training.

💡Expert Tip: Companies like bnxt.ai help teams choose, integrate, and optimize these frameworks—bridging the gap between research-grade models and enterprise deployment.

Conclusion

TensorFlow and PyTorch are powerful deep learning frameworks with rapidly growing community support and contributors. Both frameworks provide GPU acceleration for efficient model training and provide specialized libraries for computer vision applications. PyTorch, in particular, is popular with the research community because of its Dynamic Computation Graphs, which make prototyping deep learning models and architectures for Generative AI very quick.

TensorFlow is the preferred framework for production use, especially since it offers systems for distributed training and deployment on mobile devices, for example, by using TensorFlow Lite. For an AI engineer focusing on experimentation and research, PyTorch will be a better option, while for scalability for deployment into an industry setting, TensorFlow will be ideal.

Developers can also combine tools from both ecosystems (e.g., TorchVision, TorchServe, TensorFlow Serving, Keras, and TensorBoard) to build strong AI, deep learning, and Generative AI systems. Want to implement these frameworks for your business? The AI team at Bnxt.ai helps organizations accelerate innovation through advanced deep learning and computer vision development.

People Also Ask

What is the best framework for computer vision?

Both PyTorch and TensorFlow are excellent choices — PyTorch offers flexibility for research, while TensorFlow excels in production scalability.

Can I use PyTorch for production-level applications?

Yes. PyTorch supports production deployment through TorchServe, TorchScript, and ONNX export, making it reliable for real-world use.

How do PyTorch and TensorFlow differ in terms of model training?

PyTorch builds dynamic computation graphs at run time for flexibility, while TensorFlow can compile static graphs for optimized performance.

What are the advantages of using TensorFlow Lite for computer vision?

TensorFlow Lite enables fast, lightweight inference on mobile and edge devices, ideal for on-device computer vision tasks.
